Amazon product data extraction has become essential for e-commerce businesses, market researchers, and data analysts. Whether you’re monitoring competitor pricing, conducting product research, or building a price comparison tool, reliable access to Amazon’s vast product catalog is crucial. This guide walks through using Pangolin’s Amazon Scraping API to extract product data efficiently and at scale. It covers authentication, single-product requests, the response format, a simple price tracker, and rate limiting.

Why Amazon Product Data Extraction Matters

Amazon hosts over 350 million products across multiple marketplaces worldwide. For businesses operating in the e-commerce space, access to this data provides invaluable insights:

- Competitive Intelligence: Track competitor pricing strategies, product launches, and inventory levels in real time

- Market Research: Identify trending products, analyze customer sentiment through reviews, and discover market gaps

- Dynamic Pricing: Adjust your pricing strategy based on real-time market data to maximize profitability

- Product Selection: Make data-driven decisions about which products to sell based on demand, competition, and profitability metrics

- Inventory Management: Monitor stock levels and availability patterns to optimize your own inventory

However, extracting this data manually is impractical at scale. Amazon’s page structure is complex and changes frequently, and the site deploys sophisticated anti-bot measures. This is where Pangolin’s Amazon Scraping API comes in.

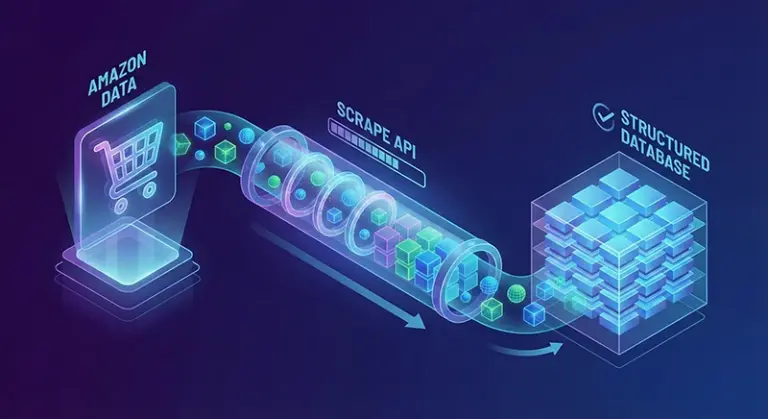

Understanding Pangolin’s Amazon Scraping API

Pangolin’s Amazon Scraping API is a professional-grade solution designed specifically for Amazon data extraction. Unlike basic web scrapers, it handles all the complexities of Amazon’s infrastructure:

Key Features

- 99.9% Success Rate: Advanced anti-detection technology ensures reliable data extraction

- Multi-Marketplace Support: Extract data from Amazon.com, Amazon.co.uk, Amazon.de, and 15+ other marketplaces

- Comprehensive Data Fields: Access product details, pricing, reviews, ratings, images, variants, and more

- Real-time Data: Get fresh, up-to-date information with sub-second response times

- Scalable Infrastructure: Handle millions of requests with enterprise-grade reliability
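With multi-marketplace support, the main thing that changes between requests is the product URL. The sketch below builds that URL from an ASIN and a marketplace code. It assumes the standard `/dp/<ASIN>` URL pattern works on each storefront domain. The `MARKETPLACE_DOMAINS` table and the `product_url` helper are hypothetical names used only for illustration. The zipcode used elsewhere in this guide ("10041") is a US one, so check Pangolin’s documentation for the `bizContext` values other marketplaces expect.

```python
# Hypothetical lookup table: short marketplace code -> Amazon storefront domain.
MARKETPLACE_DOMAINS = {
    "US": "www.amazon.com",
    "UK": "www.amazon.co.uk",
    "DE": "www.amazon.de",
}


def product_url(asin: str, marketplace: str = "US") -> str:
    """Build a product detail URL of the form https://<domain>/dp/<ASIN>."""
    try:
        domain = MARKETPLACE_DOMAINS[marketplace.upper()]
    except KeyError:
        raise ValueError(f"Unknown marketplace code: {marketplace!r}") from None
    # Normalize the ASIN: strip whitespace and uppercase it.
    return f"https://{domain}/dp/{asin.strip().upper()}"


print(product_url("B0DYTF8L2W", "de"))
```

The table is kept as plain data on purpose. Adding another storefront is a one-line change and does not touch the request code.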

Getting Started: Prerequisites

Before diving into code, you’ll need:

- Pangolin API Account: Sign up at tool.pangolinfo.com to get your API credentials

- API Key: Obtain your authentication key from the dashboard (you’ll get 1,000 free credits to start)

- Development Environment: Python 3.7+, Node.js 14+, or any language that can make HTTP requests

- Basic Programming Knowledge: Familiarity with REST APIs and JSON data structures
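The API is plain HTTPS with a JSON body, so any HTTP client will work. The minimal sketch below uses only Python’s standard library, with no `requests` dependency. It builds, but does not send, a POST request carrying the same fields used throughout this guide: `url`, `parserName`, `format`, and `bizContext.zipcode`. `build_scrape_request` is a hypothetical helper name, not part of Pangolin’s API.

```python
import json
import urllib.request

API_ENDPOINT = "https://scrapeapi.pangolinfo.com/api/v1/scrape"


def build_scrape_request(api_key: str, url: str, zipcode: str = "10041") -> urllib.request.Request:
    """Build (but do not send) a POST request for the amzProductDetail parser."""
    body = {
        "url": url,
        "parserName": "amzProductDetail",
        "format": "json",
        "bizContext": {"zipcode": zipcode},
    }
    return urllib.request.Request(
        API_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_scrape_request("YOUR_API_KEY", "https://www.amazon.com/dp/B0DYTF8L2W")
print(req.get_method(), req.full_url)

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(req, timeout=60) as resp:
#     result = json.load(resp)
```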

Authentication and API Basics

Pangolin’s API uses Bearer token authentication. Every request must include your API key in the Authorization header. Here’s the basic structure:

```bash
curl -X POST "https://scrapeapi.pangolinfo.com/api/v1/scrape" \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "url": "https://www.amazon.com/dp/PRODUCT_ASIN",
    "parserName": "amzProductDetail",
    "format": "json",
    "bizContext": {
      "zipcode": "10041"
    }
  }'
```

Security Best Practice

Never hardcode your API key in client-side code or commit it to version control. Use environment variables or secure key management systems.
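For example, a small loader can read the key from an environment variable and fail fast with a clear message when it is missing. This is a minimal sketch. `PANGOLIN_API_KEY` is a variable name chosen here for illustration, not one Pangolin prescribes, and `load_api_key` is a hypothetical helper.

```python
import os


def load_api_key(var_name: str = "PANGOLIN_API_KEY") -> str:
    """Return the API key from the environment, or raise if it is unset or blank."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Pangolin API key "
            f"(e.g. `export {var_name}=...` in your shell)."
        )
    return key


# Usage (hypothetical variable name; set it in your shell or secret manager first):
# API_KEY = load_api_key()
```

Failing at startup is usually preferable to sending requests with an empty `Authorization` header and debugging the resulting authentication errors later.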

Extracting Product Data: Step-by-Step Guide

1. Basic Product Information Extraction

Let’s start with extracting basic product information. The most common use case is fetching data from a product detail page using the ASIN (Amazon Standard Identification Number).
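If you start from full product links rather than bare ASINs, a small helper can pull the ASIN out of the URL. This sketch makes two assumptions: product links use the common `/dp/<ASIN>` or `/gp/product/<ASIN>` forms, and the ASIN is the usual 10-character alphanumeric code. `extract_asin` is a hypothetical helper, not part of Pangolin’s API.

```python
import re
from typing import Optional

# Match a 10-character alphanumeric ASIN after /dp/ or /gp/product/,
# followed by a path separator, query string, fragment, or end of URL.
_ASIN_IN_URL = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:[/?#]|$)", re.IGNORECASE)


def extract_asin(url: str) -> Optional[str]:
    """Return the ASIN embedded in an Amazon product URL, or None if none is found."""
    match = _ASIN_IN_URL.search(url)
    return match.group(1).upper() if match else None


print(extract_asin("https://www.amazon.com/Sweetcrispy-Convertible/dp/B0DYTF8L2W/ref=sr_1_1"))
```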

Python Example:

```python
import json
import os

import requests

# Your Pangolin API credentials, read from the environment rather than hardcoded
# (PANGOLIN_API_KEY is an example variable name)
API_KEY = os.environ.get("PANGOLIN_API_KEY", "your_api_key_here")
API_ENDPOINT = "https://scrapeapi.pangolinfo.com/api/v1/scrape"

# Product ASIN you want to scrape
product_asin = "B0DYTF8L2W"
amazon_url = f"https://www.amazon.com/dp/{product_asin}"

# Request headers
headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

# Request payload
payload = {
    "url": amazon_url,
    "parserName": "amzProductDetail",
    "format": "json",
    "bizContext": {
        "zipcode": "10041"  # US zipcode (required for Amazon)
    },
}

# Make the API request (the timeout keeps a stalled call from hanging forever)
response = requests.post(API_ENDPOINT, headers=headers, json=payload, timeout=60)

# Check if request was successful
if response.status_code == 200:
    result = response.json()
    # Extract product information from the nested response structure
    if result.get('code') == 0:
        data = result.get('data') or {}
        # Fall back to an empty dict if the "json" list is missing or empty
        json_data = (data.get('json') or [{}])[0]
        if json_data.get('code') == 0:
            product_results = (json_data.get('data') or {}).get('results', [])
            if product_results:
                product = product_results[0]
                print(f"Product Title: {product.get('title')}")
                print(f"Price: {product.get('price')}")
                print(f"Rating: {product.get('star')} stars")
                print(f"Number of Reviews: {product.get('rating')}")
                print(f"Brand: {product.get('brand')}")
                print(f"Sales: {product.get('sales')}")

                # Save to file
                with open(f'product_{product_asin}.json', 'w') as f:
                    json.dump(product, f, indent=2)
            else:
                print("No product data found")
        else:
            print(f"Parser error: {json_data.get('message')}")
    else:
        print(f"API error: {result.get('message')}")
else:
    print(f"HTTP Error: {response.status_code}")
    print(response.text)
```

2. Understanding the Response Structure

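When you set `"format": "json"`, Pangolin returns structured JSON like the example below (abbreviated). The outer `code` reports the status of the API call, and each entry in `data.json` carries its own `code` from the parser. Note that `star` holds the average star rating, while `rating` holds the number of reviews: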

```json
{
  "code": 0,
  "message": "ok",
  "data": {
    "json": [
      {
        "code": 0,
        "data": {
          "results": [
            {
              "asin": "B0DYTF8L2W",
              "title": "Sweetcrispy Convertible Sectional Sofa Couch...",
              "price": "$599.99",
              "star": "4.4",
              "rating": "22",
              "image": "https://m.media-amazon.com/images/I/...",
              "images": ["https://...", "..."],
              "brand": "Sweetcrispy",
              "description": "Product description...",
              "sales": "50+ bought in past month",
              "seller": "Amazon.com",
              "shipper": "Amazon",
              "merchant_id": "null",
              "color": "Beige",
              "size": "126.77\"W",
              "has_cart": false,
              "otherAsins": ["B0DYTF8XXX"],
              "coupon": "null",
              "category_id": "3733551",
              "category_name": "Sofas & Couches",
              "product_dims": "20.07\"D x 126.77\"W x 24.01\"H",
              "pkg_dims": "20.07\"D x 126.77\"W x 24.01\"H",
              "product_weight": "47.4 Pounds",
              "reviews": {...},
              "customerReviews": "...",
              "first_date": "2024-01-15",
              "deliveryTime": "Dec 15 - Dec 18",
              "additional_details": false
            }
          ]
        },
        "message": "ok"
      }
    ],
    "url": "https://www.amazon.com/dp/B0DYTF8L2W",
    "taskId": "45403c7fd7c148f280d0f4f7284bc9e9"
  }
}
```

3. Building a Price Monitoring System

Price monitoring is one of the most valuable applications of Amazon data extraction. Here’s an example that fetches a product’s current price and appends it to a local SQLite price-history table:

```python
import os
import sqlite3

import requests

API_KEY = os.environ.get("PANGOLIN_API_KEY", "your_api_key_here")
API_ENDPOINT = "https://scrapeapi.pangolinfo.com/api/v1/scrape"


class AmazonPriceTracker:
    def __init__(self, api_key, db_path='price_history.db'):
        self.api_key = api_key
        self.db_path = db_path
        self.setup_database()

    def setup_database(self):
        """Create database table for price history"""
        conn = sqlite3.connect(self.db_path)
        cursor = conn.cursor()
        cursor.execute('''
            CREATE TABLE IF NOT EXISTS price_history (
                id INTEGER PRIMARY KEY AUTOINCREMENT,
                asin TEXT NOT NULL,
                title TEXT,
                price TEXT,
                timestamp DATETIME DEFAULT CURRENT_TIMESTAMP
            )
        ''')
        conn.commit()
        conn.close()

    def track_price(self, asin):
        """Fetch current price and save it to the database; return the product or None"""
        url = f"https://www.amazon.com/dp/{asin}"
        payload = {
            "url": url,
            "parserName": "amzProductDetail",
            "format": "json",
            "bizContext": {"zipcode": "10041"},
        }
        headers = {
            "Authorization": f"Bearer {self.api_key}",
            "Content-Type": "application/json",
        }
        response = requests.post(API_ENDPOINT, headers=headers, json=payload, timeout=60)
        if response.status_code != 200:
            return None

        data = response.json()
        if data.get('code') != 0:
            return None
        # Walk data -> json[0] -> data -> results[0], tolerating missing or empty levels
        page = ((data.get('data') or {}).get('json') or [{}])[0]
        results = (page.get('data') or {}).get('results') or []
        if page.get('code') != 0 or not results:
            return None
        product = results[0]

        # Save to database
        conn = sqlite3.connect(self.db_path)
        cursor = conn.cursor()
        cursor.execute('''
            INSERT INTO price_history (asin, title, price)
            VALUES (?, ?, ?)
        ''', (asin, product.get('title'), product.get('price')))
        conn.commit()
        conn.close()
        return product


# Usage
tracker = AmazonPriceTracker(API_KEY)
product = tracker.track_price('B08N5WRWNW')
if product:
    print(f"Tracked: {product.get('title')} - {product.get('price')}")
else:
    print("Could not fetch product data")
```

Best Practices and Optimization
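Centralize response parsing. Both examples above walk the same nested envelope by hand: an outer `code`, then a per-page `code` inside `data.json`, then `data.results`. Moving that walk into one function, and converting the price string into a number once, simplifies downstream work such as comparing stored prices over time. This is a minimal sketch. `PangolinError`, `first_result`, and `parse_price` are hypothetical names. `parse_price` assumes US-style strings such as "$1,299.99"; marketplaces that format prices differently (for example with a decimal comma) would need their own parser.

```python
import re
from decimal import Decimal
from typing import Optional


class PangolinError(Exception):
    """Raised when the API envelope or the parser reports a non-zero code."""


def first_result(body: dict) -> Optional[dict]:
    """Return the first product in a Pangolin JSON response, or None if there is none."""
    if body.get("code") != 0:
        raise PangolinError(f"API error: {body.get('message')}")
    pages = (body.get("data") or {}).get("json") or []
    if not pages:
        return None
    page = pages[0]
    if page.get("code") != 0:
        raise PangolinError(f"Parser error: {page.get('message')}")
    results = (page.get("data") or {}).get("results") or []
    return results[0] if results else None


def parse_price(text: Optional[str]) -> Optional[Decimal]:
    """Turn a US-style price string such as "$1,299.99" into Decimal("1299.99")."""
    if not text:
        return None
    # Take the first run of digits (with optional thousands commas and decimals).
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    return Decimal(match.group(0).replace(",", "")) if match else None


sample = {
    "code": 0,
    "data": {"json": [{"code": 0, "data": {"results": [{"title": "Sofa", "price": "$599.99"}]}}]},
}
product = first_result(sample)
print(product["title"], parse_price(product["price"]))
```

`Decimal` is used instead of `float` so that stored prices compare exactly, without binary rounding artifacts.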

Rate Limiting and Error Handling

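Implementing proper rate limiting and error handling ensures reliable, long-term operation. A simple way to cap your request rate is a decorator that enforces a minimum interval between calls: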

```python
import time
from functools import wraps


def rate_limit(calls_per_second=10):
    """Decorator to rate limit API calls (single-threaded use; not thread-safe)"""
    min_interval = 1.0 / calls_per_second
    last_called = [0.0]  # mutable cell so the wrapper can update it

    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            # time.monotonic() is unaffected by system clock adjustments
            elapsed = time.monotonic() - last_called[0]
            left_to_wait = min_interval - elapsed
            if left_to_wait > 0:
                time.sleep(left_to_wait)
            ret = func(*args, **kwargs)
            last_called[0] = time.monotonic()
            return ret

        return wrapper

    return decorator


@rate_limit(calls_per_second=5)
def scrape_with_safety(asin):
    """Scrape with rate limiting"""
    # Your scraping code here
    pass
```

Conclusion

Amazon product data extraction is a powerful capability that can transform your e-commerce business strategy. With Pangolin’s Amazon Scraping API, you have access to enterprise-grade infrastructure that handles all the complexities of data extraction, allowing you to focus on deriving insights and making data-driven decisions.

Next Steps

- Sign up for Pangolin: Get your free API key at tool.pangolinfo.com

- Explore the Documentation: Visit docs.pangolinfo.com for complete API reference

- Test in the Playground: Try the interactive API Playground

- Join the Community: Connect with other developers and share your use cases