In today’s fiercely competitive e-commerce landscape, data is the lifeblood of successful businesses. Thriving Amazon sellers know that accurate, timely market intelligence drives product selection and pricing, and ultimately decides the fate of the entire operation. However, as Amazon tightens data access restrictions and deploys increasingly sophisticated anti-scraping mechanisms, traditional data acquisition methods can no longer keep up with modern e-commerce demands.

This guide introduces Pangolin Scrape API, an Amazon data collection solution, and walks you through the complete workflow, from API registration to your first data, in about 5 minutes. Whether you’re a technical novice or an experienced developer, you’ll find practical guidance and best practices you can apply immediately.

The Reality of Amazon Data Collection Challenges: Why Traditional Methods Are Obsolete

Many e-commerce sellers have encountered similar frustrations during their data acquisition journey. Traditional web scraping technologies face unprecedented challenges as Amazon’s anti-scraping mechanisms become increasingly intelligent, employing IP blocking, CAPTCHA verification, dynamic content loading, and other sophisticated techniques that make self-built scraping systems extremely complex and unstable.

Maintenance costs are even more troublesome. What looks like a simple scraping program often needs a dedicated technical team to keep it running and to handle unexpected issues. When Amazon updates its page structure or adjusts its anti-scraping strategy, an entire data collection system can fail instantly, causing significant business losses.

While third-party data tools reduce technical barriers, they commonly suffer from delayed data updates, limited coverage, and low customization capabilities. For enterprises requiring large-scale, real-time, personalized data analysis, these tools clearly cannot meet their needs.

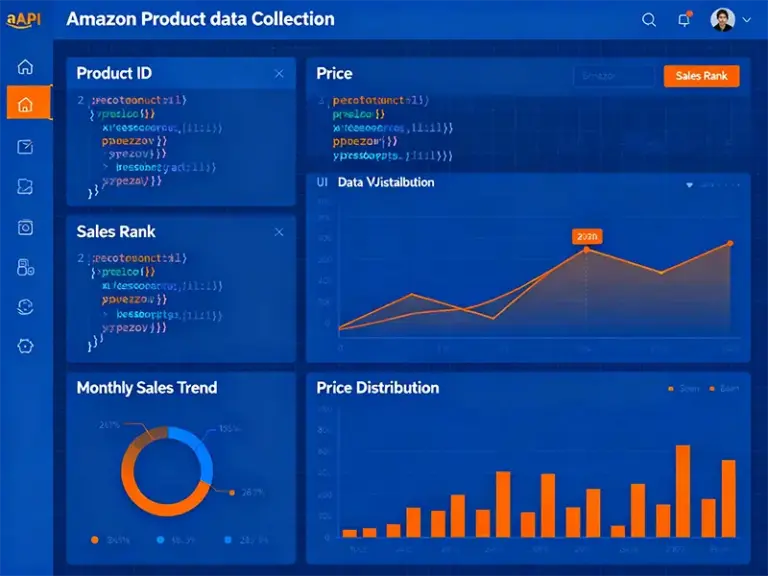

Pangolin Scrape API: Redefining Amazon Data Collection Standards

Pangolin Scrape API was built to change this. As a professional e-commerce data collection API provider, the Pangolin team has spent years specializing in Amazon data scraping, accumulating deep technical experience and industry insight.

The API’s core advantages fall into two areas. The first is data coverage: it supports Amazon product details, search results, ranking lists, review data, and price history. The second is its architecture: a distributed cloud deployment designed for high-concurrency processing, with a stated 99.9% service availability.

More importantly, Pangolin Scrape API provides both synchronous and asynchronous calling modes to suit different business scenarios. The synchronous API is built for real-time queries with fast response times, making it ideal for single requests or small batches. The asynchronous API is designed for large-scale collection, supporting batch processing of thousands of data requests.

5-Minute Quick Setup Guide: From Zero to Data Acquisition

Step 1: Register Account and Obtain API Key (1 minute)

Visit the Pangolin official website (www.pangolinfo.com), click the registration button to create your account. After completing email verification, enter the console page and generate your exclusive API key in the API management area. New users typically receive a certain amount of free testing quota, sufficient for completing initial functionality verification.

Step 2: Environment Preparation and Dependency Installation (1 minute)

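Ensure your development environment has Python 3.7 or higher installed (the asynchronous example uses `asyncio.run`, which was added in Python 3.7). Install the required packages via pip. `json` ships with Python’s standard library and does not need to be installed; `aiohttp` is needed for the asynchronous example:

```bash
pip install requests aiohttp
```

Step 3: Synchronous API Integration Example (2 minutes)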

Here’s a complete code example for using Pangolin Scrape API to retrieve Amazon product data:

```python
import requests


class PangolinAPIClient:
    def __init__(self, api_key):
        self.api_key = api_key
        self.base_url = "https://api.pangolinfo.com/v1"
        self.headers = {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        }

    def get_product_data(self, asin, marketplace="US"):
        """Retrieve Amazon product detail data"""
        endpoint = f"{self.base_url}/amazon/product"
        payload = {
            "asin": asin,
            "marketplace": marketplace,
            "include_reviews": True,
            "include_variants": True,
        }
        try:
            response = requests.post(endpoint,
                                     headers=self.headers,
                                     json=payload,
                                     timeout=30)
            # Raise on 4xx/5xx so errors are not mistaken for data
            response.raise_for_status()
            return response.json()
        except requests.exceptions.RequestException as e:
            print(f"API request failed: {e}")
            return None

    def search_products(self, keyword, page=1):
        """Search Amazon products"""
        endpoint = f"{self.base_url}/amazon/search"
        payload = {
            "keyword": keyword,
            "page": page,
            "marketplace": "US",
            "include_sponsored": True,
        }
        try:
            response = requests.post(endpoint,
                                     headers=self.headers,
                                     json=payload,
                                     timeout=30)
            response.raise_for_status()
            return response.json()
        except requests.exceptions.RequestException as e:
            print(f"Search request failed: {e}")
            return None


# Usage example
if __name__ == "__main__":
    # Replace with your API key
    api_key = "your_api_key_here"
    client = PangolinAPIClient(api_key)

    # Get specific product data
    product_data = client.get_product_data("B08N5WRWNW")
    if product_data:
        print("Product title:", product_data.get("title"))
        print("Current price:", product_data.get("price"))
        print("Rating:", product_data.get("rating"))

    # Search products
    search_results = client.search_products("wireless headphones")
    if search_results:
        print(f"Found {len(search_results.get('products', []))} products")
        for product in search_results.get('products', [])[:3]:
            print(f"- {product.get('title')} - ${product.get('price')}")
```

Step 4: Asynchronous API Integration Example (1 minute)

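For large-scale data collection, the asynchronous approach is the preferred choice. The example below uses `asyncio` and `aiohttp` to send many product requests concurrently over a single HTTP session: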

```python
import asyncio

import aiohttp


class AsyncPangolinClient:
    def __init__(self, api_key):
        self.api_key = api_key
        self.base_url = "https://api.pangolinfo.com/v1"
        self.headers = {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        }

    async def batch_get_products(self, asin_list):
        """Batch retrieve product data concurrently"""
        # One shared session reuses connections; the timeout stops hung requests
        timeout = aiohttp.ClientTimeout(total=30)
        async with aiohttp.ClientSession(timeout=timeout) as session:
            tasks = [self.get_single_product(session, asin) for asin in asin_list]
            # Results come back in the same order as asin_list
            results = await asyncio.gather(*tasks, return_exceptions=True)
            return results

    async def get_single_product(self, session, asin):
        """Get single product data"""
        endpoint = f"{self.base_url}/amazon/product"
        payload = {
            "asin": asin,
            "marketplace": "US",
        }
        try:
            async with session.post(endpoint,
                                    headers=self.headers,
                                    json=payload) as response:
                response.raise_for_status()
                return await response.json()
        except Exception as e:
            # Return the error instead of raising so one failure doesn't abort the batch
            return {"error": str(e), "asin": asin}


# Batch processing example
async def main():
    api_key = "your_api_key_here"
    client = AsyncPangolinClient(api_key)

    # Batch retrieve multiple product data
    asin_list = ["B08N5WRWNW", "B07Q9MJKBV", "B08GKQHKR8"]
    results = await client.batch_get_products(asin_list)

    for result in results:
        if isinstance(result, dict) and "error" not in result:
            print(f"Product: {result.get('title')} - Price: {result.get('price')}")
        else:
            print(f"Retrieval failed: {result}")


# Run asynchronous task
if __name__ == "__main__":
    asyncio.run(main())
```

Advanced Applications: Optimal Selection Strategy for Sync vs Async APIs

Choosing between synchronous and asynchronous APIs depends on your specific business scenarios. Synchronous API suits real-time query scenarios, such as users querying specific product information on frontend pages requiring immediate results. This mode’s advantage lies in its simplicity and directness, with clear code logic suitable for rapid prototyping and small-scale applications.

Asynchronous API represents the preferred solution for large-scale data collection. When you need to simultaneously process hundreds or even thousands of product data points, asynchronous mode can significantly improve processing efficiency and reduce overall execution time. It’s particularly suitable for scheduled tasks, batch data updates, and competitor monitoring scenarios.
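Sending every request at once, as `batch_get_products` does with `asyncio.gather`, can run into rate limits when the list grows into the thousands. A minimal sketch, assuming you want to cap the number of in-flight requests, wraps each call in an `asyncio.Semaphore`. The helper `fetch_bounded`, the stand-in `fake_fetch`, and the default limit of 5 are hypothetical; check your plan’s documented limits for a real value.

```python
import asyncio
from typing import Awaitable, Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


async def fetch_bounded(
    items: Iterable[T],
    fetch: Callable[[T], Awaitable[R]],
    limit: int = 5,  # hypothetical cap, not a documented Pangolin limit
) -> List[R]:
    """Run fetch(item) for every item with at most `limit` calls in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(item: T) -> R:
        # Each task waits here until one of the `limit` slots is free.
        async with semaphore:
            return await fetch(item)

    # gather returns results in the same order as the input items.
    return await asyncio.gather(*(guarded(item) for item in items))


# Hypothetical stand-in for a real HTTP call, used for demonstration only.
async def fake_fetch(asin: str) -> dict:
    await asyncio.sleep(0.01)  # simulate network latency
    return {"asin": asin}


if __name__ == "__main__":
    print(asyncio.run(fetch_bounded(["B08N5WRWNW", "B07Q9MJKBV"], fake_fetch, limit=2)))
```

Inside the asynchronous client, `fetch` could be something like `lambda asin: self.get_single_product(session, asin)`, so the existing per-product logic stays unchanged while the semaphore controls throughput.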

In practical applications, many enterprises adopt hybrid strategies: using synchronous API for handling real-time user requests while employing asynchronous API for background data updates and analysis. This architecture ensures both user experience and data processing efficiency.

Data Processing and Analysis: From Raw Data to Business Insights

Data acquisition is only the first step; turning raw data into valuable business insight is what matters. Pangolin Scrape API returns clearly structured data covering many product dimensions, which gives subsequent analysis a rich foundation.
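A common first step is flattening an API response into tabular rows for a spreadsheet or database. The sketch below assumes the response shape used in the synchronous example, a `products` list whose items carry `title` and `price` keys; the `asin` key, the helper name `search_results_to_csv`, and the sample data are hypothetical, so confirm the real field names against the API’s response schema.

```python
import csv
import io
from typing import Any, Dict, List


def search_results_to_csv(search_results: Dict[str, Any], fields: List[str]) -> str:
    """Flatten the `products` list of a search response into CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields)
    writer.writeheader()
    for product in search_results.get("products", []):
        # Missing keys become empty cells instead of raising KeyError.
        writer.writerow({field: product.get(field, "") for field in fields})
    return buffer.getvalue()


# Made-up sample shaped like the assumed search response.
sample = {
    "products": [
        {"asin": "B08N5WRWNW", "title": "Example headphones", "price": 59.99},
        {"asin": "B07Q9MJKBV", "title": "Another model"},
    ]
}

if __name__ == "__main__":
    print(search_results_to_csv(sample, ["asin", "title", "price"]))
```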

In product selection analysis, you can combine multiple dimensions including price trends, sales rankings, and review sentiment analysis to construct comprehensive evaluation models. Through comparative analysis of different products’ market performance, you can identify potential niche markets and product opportunities.
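One simple way to combine several dimensions is a weighted score over min-max normalized metrics. This is an illustrative sketch rather than a recommended model: the field names (`sales_rank`, `rating`, `review_count`) and the weights are assumptions, average rating stands in as a rough proxy for review sentiment, and price trends are left out for brevity.

```python
from typing import Dict, List

# Hypothetical weights; tune them to your own selection criteria.
WEIGHTS = {"rank": 0.5, "rating": 0.3, "reviews": 0.2}


def min_max(values: List[float]) -> List[float]:
    """Scale values to [0, 1]; if all values are equal, map them to 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def score_products(products: List[Dict]) -> List[Dict]:
    """Return copies of the products with a `score` key, best first."""
    # A lower sales rank is better, so invert it after normalizing.
    rank = [1 - x for x in min_max([p["sales_rank"] for p in products])]
    rating = min_max([p["rating"] for p in products])
    reviews = min_max([p["review_count"] for p in products])
    scored = []
    for p, r, s, n in zip(products, rank, rating, reviews):
        score = WEIGHTS["rank"] * r + WEIGHTS["rating"] * s + WEIGHTS["reviews"] * n
        scored.append({**p, "score": round(score, 3)})
    return sorted(scored, key=lambda p: p["score"], reverse=True)
```

Min-max scaling keeps each metric on the same 0 to 1 range so the weights, not the raw units, decide how much each dimension counts.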

Competitor monitoring represents another important application scenario. By regularly collecting competitor product data, you can stay informed about market dynamics and adjust your pricing and marketing strategies accordingly. Combined with historical data analysis, you can also predict market trends and position yourself ahead of new business opportunities.
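The core of a monitoring job is comparing the latest snapshot with the previous one. A minimal sketch, assuming each collection run is stored as a `{asin: price}` dictionary; the function name and the 5% default threshold are hypothetical choices.

```python
from typing import Dict, List, Tuple


def detect_price_changes(
    previous: Dict[str, float],
    current: Dict[str, float],
    threshold_pct: float = 5.0,
) -> List[Tuple[str, float, float, float]]:
    """Compare two {asin: price} snapshots and report moves above a threshold.

    Returns (asin, old_price, new_price, percent_change) tuples.
    """
    changes = []
    for asin, new_price in current.items():
        old_price = previous.get(asin)
        if old_price is None or old_price == 0:
            continue  # new listing or unusable baseline: nothing to compare
        pct = (new_price - old_price) / old_price * 100
        if abs(pct) >= threshold_pct:
            changes.append((asin, old_price, new_price, round(pct, 2)))
    return changes
```

Storing each snapshot with a timestamp also builds the historical series needed for trend analysis.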

Best Practices and Important Considerations

Following a few best practices will help you get more out of Pangolin Scrape API. First, control your request frequency: although the API supports high-concurrency access, plan request volumes around actual needs to avoid wasting resources.
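A client-side limiter is a simple way to keep request frequency predictable. The sketch below spaces calls evenly so that at most `rate` calls start per second; the class name and any rate you pass are assumptions, and the injectable `clock` and `sleep` parameters exist only to make it testable.

```python
import time
from typing import Callable


class RateLimiter:
    """Allow at most `rate` calls per second by spacing calls evenly."""

    def __init__(self, rate: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.interval = 1.0 / rate
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = 0.0

    def wait(self) -> None:
        """Block until the next call is allowed, then reserve that slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval


# Usage (rate=2 is a placeholder, not a documented limit):
# limiter = RateLimiter(rate=2)
# for asin in asins:
#     limiter.wait()
#     client.get_product_data(asin)
```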

A caching strategy also matters. For data that changes rarely, such as basic product information, set a suitably long cache time to avoid duplicate requests. For data that must stay current, such as prices and inventory, refresh more frequently.
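Per-data-type cache lifetimes can be expressed with a small time-to-live (TTL) cache. A minimal in-memory sketch under stated assumptions: `TTLCache` and `cached_fetch` are hypothetical helpers, the TTL values in the usage comment are arbitrary examples, and a production system would more likely use a shared store such as a database or Redis.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # stale: drop it so the caller refetches
            return None
        return value

    def set(self, key: Hashable, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def cached_fetch(cache: TTLCache, key: Hashable, fetch: Callable[[], Any]) -> Any:
    """Return the cached value for key, calling fetch() only on a miss."""
    value = cache.get(key)
    if value is None:
        value = fetch()
        cache.set(key, value)
    return value


# Usage with hypothetical lifetimes: long for static details, short for prices.
# details_cache = TTLCache(ttl=24 * 3600)
# price_cache = TTLCache(ttl=15 * 60)
```

Because a `None` result is treated as a miss, a failed request (which the synchronous client reports as `None`) is retried on the next call instead of being served from the cache.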

Error handling and retry mechanisms are key to system stability. In complex network environments, occasional request failures are normal. Implement an exponential backoff retry strategy that automatically retries temporary errors, waiting progressively longer between attempts, to improve data acquisition success rates.
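Exponential backoff can be written as a small generic wrapper. This is one common variant ("full jitter", where each wait is a random fraction of an exponentially growing cap), not necessarily what Pangolin recommends; `retry_with_backoff` and its defaults are hypothetical. Because `get_product_data` in the synchronous example catches exceptions and returns `None`, wrap a call that raises instead, and consider limiting `retry_on` to transient errors such as `requests.exceptions.Timeout` and `requests.exceptions.ConnectionError`.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func(), retrying on `retry_on` errors with jittered exponential waits."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # The wait cap doubles each attempt (1s, 2s, 4s, ... with the
            # defaults) and never exceeds max_delay.
            cap = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: a random wait in [0, cap] keeps clients from retrying in lockstep.
            sleep(random.uniform(0, cap))
    raise RuntimeError("max_attempts must be at least 1")
```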

Data security and compliant usage cannot be overlooked. Store API keys securely and avoid hardcoding them in code; the placeholder keys in the examples above are for illustration only. Also comply with relevant laws, regulations, and platform policies, use acquired data responsibly, and avoid infringing on others’ rights.
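A common way to keep keys out of source code is to read them from an environment variable at startup. The variable name `PANGOLIN_API_KEY` and the helper `load_api_key` below are hypothetical conventions, not something Pangolin defines.

```python
import os


def load_api_key(var_name: str = "PANGOLIN_API_KEY") -> str:
    """Read the API key from an environment variable instead of source code."""
    api_key = os.environ.get(var_name)
    if not api_key:
        # Fail fast with a clear message rather than sending unauthenticated requests.
        raise RuntimeError(
            f"Set the {var_name} environment variable before running this script."
        )
    return api_key


# Usage, after running `export PANGOLIN_API_KEY=...` in your shell:
# client = PangolinAPIClient(load_api_key())
```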

Cost-Benefit Analysis: Why Choose Pangolin Scrape API?

From a cost-benefit perspective, Pangolin Scrape API offers clear advantages compared to self-built scraping systems. Self-built systems require substantial investment in development time, server resources, and maintenance costs, while facing technical and compliance risks.

Using professional API services allows you to focus more energy on core business logic and data analysis rather than struggling with underlying technical implementation. This professional division of labor not only improves efficiency but also reduces overall technical risks.

More importantly, the Pangolin team continuously invests in research and development, optimizing API performance and functionality to keep the service at industry-leading standards. Few individual enterprises can sustain that kind of ongoing investment; a specialist provider lets you benefit from the latest improvements without building them yourself.

Future Outlook: The New Era of Data-Driven E-commerce

With the rapid development of artificial intelligence and big data technologies, data-driven business decision-making has become standard practice in the e-commerce industry. Pangolin Scrape API, as an important driver of this trend, will continue investing resources in technological innovation and service optimization.

Future API services will become more intelligent, providing not only raw data but also integrating more analytical functions and predictive capabilities. Through machine learning algorithms, APIs will automatically identify market trends, predict price changes, and recommend potential products, providing users with higher-value data services.

Cross-platform data integration also represents a development direction. Beyond Amazon, Pangolin supports data collection from multiple e-commerce platforms including Walmart, eBay, and Shopify, helping users build comprehensive market insight systems.

Start Your Data-Driven Journey Today

In this data-centric era, mastering efficient data acquisition capabilities means gaining competitive advantages. Pangolin Scrape API provides you with a powerful yet user-friendly tool that makes complex data collection simple and efficient.

Whether you’re a startup e-commerce entrepreneur or a traditional enterprise seeking digital transformation, now is the optimal time to begin data-driven decision-making. Through the 5-minute quick setup guide provided in this article, you can immediately start experiencing professional-grade data collection services.

Visit www.pangolinfo.com, register your account, obtain your API key, and embark on your data-driven journey. In this opportunity-rich e-commerce market, let data become your most reliable business partner, helping you stand out in competition and achieve sustained business growth and success.