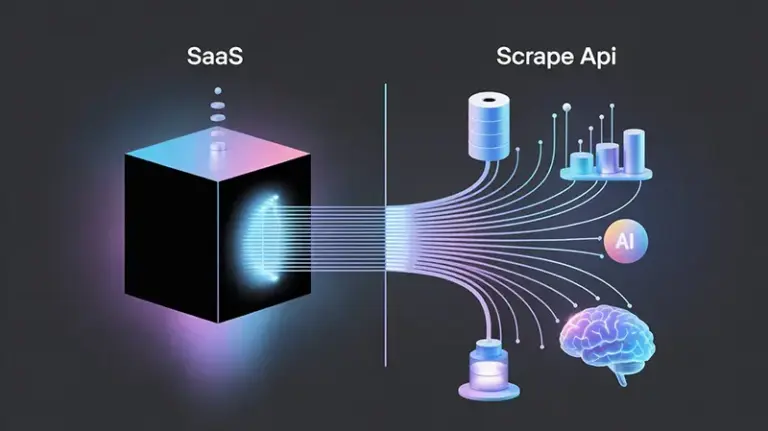

An e-commerce data collection Scrape API becomes essential when your team needs to process hundreds of thousands of Amazon product data points daily, when existing SaaS tools fail at critical moments, and when your custom data collection needs far exceed what standardized products can offer. If that describes your situation, you may have reached an important technical decision point.

This isn’t a simple discussion about whether one tool is better than another. It’s a deeper consideration of business growth stages, technology architecture choices, and cost-effectiveness optimization. Let’s start with a real-world scenario:

Imagine you are the head of technology for a cross-border e-commerce company with an annual turnover exceeding a hundred million dollars. Your product selection team needs to analyze data changes for tens of thousands of ASINs every day. Your operations team needs to monitor sponsored ad placements across dozens of categories. Your data analysts need to adjust keyword strategies based on real-time search engine results page (SERP) data. At this point, the seller tools or other SaaS solutions you use start to feel inadequate—not because they lack features, but because they lack flexibility, their costs are prohibitively high, and their data timeliness can’t keep up with the pace of your business.

The “Growth Ceiling” of Traditional SaaS Tools

The Personalization Dilemma of Standardized Products

Most e-commerce SaaS tools follow the same product logic: to serve the largest common denominator of users, they must standardize their features. This standardization can indeed solve problems quickly in the early stages of a business, but issues emerge as your business complexity grows.

Taking Amazon data scraping as an example, standard SaaS tools typically only offer basic product information scraping. However, your business might require:

- Search results data for specific zip codes, as consumer behavior varies dramatically by region.

- Precise identification of sponsored ad slots, as this directly impacts your PPC strategy.

- The full content of “customer says” in product reviews, as this is the most authentic consumer feedback.

- Hourly data updates, because your pricing strategy needs to respond to market changes in real time.

These requirements either don’t exist in the standard packages of SaaS tools or come with hefty customization fees. More critically, even if you are willing to pay, the SaaS vendor may not have the incentive to perform deep customizations for a single client.

The Growth Bottleneck of Scrape API Call Limits

Almost all SaaS tools impose limits on Scrape API calls. The logic behind this is understandable—they need to control costs and ensure service stability. But for a rapidly growing e-commerce business, this limitation often becomes the biggest obstacle.

When your business scales from processing thousands of data points per day to millions, the cost of a SaaS tool often climbs steeply as you move up pricing tiers and accumulate overage charges. Worse, during peak business periods (like Black Friday or Cyber Monday), when you most need vast amounts of data to support decisions, the Scrape API limits become a business bottleneck.

This is when you begin to realize a fundamental problem: the business model of a SaaS tool prevents it from truly keeping pace with your business growth.

The Lack of Data Ownership and Control

Using a SaaS tool means your core business data flows through a third-party system. This not only poses data security risks but, more importantly, costs you full control over the data processing workflow.

When you need to perform special cleaning, transformation, or deep integration of data with your internal systems, the “black box” nature of SaaS tools ties your hands. You can’t know how the data was collected, you can’t control the timing and frequency of collection, and you certainly can’t optimize the collection process based on your business logic.

This lack of control might not be an issue when the business is simple, but it becomes a fatal flaw when your data strategy is a core competitive advantage.

An In-depth Analysis of the Technical Advantages of an E-commerce Data Collection Scrape API

Architectural Flexibility: Building a Data Flow That Matches Business Logic

Choosing an e-commerce data collection Scrape API over a SaaS tool is, in essence, choosing architectural autonomy. This means you can design your data collection and processing workflows according to your actual business needs, rather than passively adapting to a standardized product.

Let’s take a typical product selection process as an example:

```python
# Example of product selection data collection using the Pangolin Scrape API
import os

import requests

# Assumption: the key is sent as a bearer token and read from a placeholder
# environment variable; check the provider's documentation for the exact scheme.
headers = {"Authorization": f"Bearer {os.environ.get('PANGOLIN_API_KEY', '')}"}


# Step 1: Get search results for a target category
def get_category_products(category, price_range, zip_code="10001"):
    url = "https://api.pangolinfo.com/amazon/search"
    payload = {
        "query": category,
        "country": "US",
        "zip_code": zip_code,  # Specify zip code for collection
        "price_min": price_range[0],
        "price_max": price_range[1],
        "ad_only": False,  # Return organic results as well as sponsored ads
        "format": "structured",
    }
    response = requests.post(url, json=payload, headers=headers, timeout=30)
    response.raise_for_status()  # Fail loudly on HTTP errors
    return response.json()


# Step 2: Batch get ASIN details and review analysis
def batch_get_asin_details(asin_list):
    url = "https://api.pangolinfo.com/amazon/product/batch"
    payload = {
        "asins": asin_list,
        "include_reviews": True,
        "include_customer_says": True,  # Get full "customer says" data
        "include_sponsored_info": True,
    }
    response = requests.post(url, json=payload, headers=headers, timeout=30)
    response.raise_for_status()
    return response.json()


# Step 3: Integrate data and apply a custom selection algorithm
def apply_selection_algorithm(products_data):
    selected_products = []
    for product in products_data:
        # Custom selection logic
        if (product['review_count'] > 100 and
                product['rating'] > 4.0 and
                'positive' in product['customer_says']['sentiment']):
            selected_products.append(product)
    return selected_products
```

This Scrape API-driven architecture allows you to:

- Precisely control the scope of data collection: You can perform targeted collection based on specific zip codes, price ranges, and product attributes.

- Implement complex business logic: Tightly integrate data collection with product selection algorithms, pricing strategies, inventory management, and other business logic.

- Support real-time decisions: The low-latency nature of a Scrape API supports real-time business decision-making.

- Scale concurrent processing: Dynamically adjust the scale of concurrent collection based on business needs.
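The last point, scaling concurrent collection, can be sketched with Python’s standard `concurrent.futures` module. This is a minimal sketch rather than any provider’s client: `fetch_batch` is a hypothetical stand-in for whatever function actually calls the API, `fake_fetch` exists only for demonstration, and `max_workers` is the knob you would tune to your plan’s rate limits.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def collect_concurrently(asins, fetch_batch, batch_size=50, max_workers=4):
    """Fetch ASIN batches in parallel and return the combined results.

    `fetch_batch` is a hypothetical callable: it takes a list of ASINs
    and returns a list of product dicts.
    """
    batches = chunked(asins, batch_size)
    results = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in batch order, so output lines up with input.
        for batch_result in pool.map(fetch_batch, batches):
            results.extend(batch_result)
    return results


def fake_fetch(batch):
    # Stand-in for a real API call, used here only for demonstration.
    return [{"asin": asin} for asin in batch]
```

Raising or lowering `max_workers` changes how many batches are in flight at once without touching the business logic that consumes the results.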

Technical Guarantees for Data Integrity and Accuracy

A professional e-commerce data collection Scrape API typically far exceeds SaaS tools in data quality, especially in scenarios with high technical barriers.

Take the collection of Amazon sponsored ad slots, for instance. This is a highly technical field. Amazon’s sponsored ads use complex algorithms for display, with logic that includes user behavior, time factors, geographic location, search history, and multiple other dimensions. Ordinary collection tools struggle to achieve a 98% collection rate for sponsored ad slots because it requires:

- Deep anti-scraping technology: Understanding Amazon’s anti-scraping mechanisms and devising targeted evasions.

- Intelligent request strategies: Simulating real user browsing behavior, including mouse movements and page dwell times.

- Dynamic parameter adjustment: Adjusting collection parameters based on different search keywords, categories, and time periods.

- Real-time error handling: The ability to automatically switch collection strategies when data anomalies are detected.

Professional Scrape API services like Pangolin, through long-term technical accumulation and continuous optimization, can achieve a 98% collection rate for sponsored ad slots, a level that the vast majority of SaaS tools cannot match.

The Scale Advantage of Cost-Effectiveness

Although the initial cost of using a Scrape API may seem higher than a SaaS tool, a Scrape API generally offers better cost-effectiveness from a long-term and large-scale perspective.

This cost advantage is mainly reflected in several areas:

- Decreasing Marginal Costs: The marginal cost of a Scrape API service usually decreases as usage increases, whereas SaaS tools often use tiered pricing, leading to jump-like cost increases.

- Resource Utilization Efficiency: With a Scrape API, you can precisely control resource usage based on actual business needs, avoiding payment for unnecessary features.

- Avoiding Redundant Investments: A one-time Scrape API integration can serve multiple business scenarios, whereas SaaS tools often require separate payments for different functional modules.

What Kind of Team Should Choose an E-commerce Data Collection Scrape API?

Considerations of Technical Maturity

Choosing a Scrape API over a SaaS tool first requires considering the team’s technical maturity. This means more than just having programmers; it’s about:

- Data architecture design capability: The ability to design a data collection, storage, and processing architecture that supports business needs.

- System integration experience: The ability to deeply integrate the Scrape API service with existing business systems.

- Operations and maintenance capability: The ability to ensure the stability and maintainability of the system built upon the Scrape API.

Generally, teams with the following characteristics are better suited for a Scrape API solution:

- A dedicated technical team, including backend developers and operations staff.

- A clear data-driven business process and decision-making system.

- Customized data collection needs that cannot be met by standardized products.

- A business scale that has reached a certain level, with high requirements for both cost and efficiency.

Analysis of Business Scenario Suitability

Different business scenarios have vastly different data collection needs, and a Scrape API solution holds a decisive advantage in certain contexts:

- Large-scale Product Selection: When you need to analyze tens of thousands of product data points daily, the batch processing capability and cost advantages of a Scrape API are significant.

- Real-time Pricing Strategy: When your pricing needs to be adjusted based on real-time market data, the low-latency feature of a Scrape API is irreplaceable.

- Multi-platform Data Integration: When you need to collect data from multiple platforms like Amazon, eBay, and Shopify simultaneously, a unified Scrape API interface can greatly simplify integration complexity.

- AI and Machine Learning Applications: When you need to build AI models based on e-commerce data, the raw data and flexibility provided by a Scrape API are essential.

Best Practices for Implementing an E-commerce Data Collection Scrape API

A Phased Migration Strategy

Migrating from a SaaS tool to a Scrape API solution should not be done all at once. A more reasonable approach is to adopt a phased migration strategy:

Phase 1: Parallel Validation

While keeping the existing SaaS tool running, start integrating the Scrape API in a relatively simple business scenario. The goal of this phase is to validate the Scrape API’s data quality and technical feasibility.

```python
# Parallel validation example: Using both SaaS tool and Scrape API to compare data
# Note: get_products_from_api, get_products_from_saas, and compare_data_quality
# are placeholders that you implement for your own stack.
def validate_api_quality(asin_list):
    # Get data from the Scrape API
    api_data = get_products_from_api(asin_list)
    # Get data from the existing SaaS tool (assuming an interface exists)
    saas_data = get_products_from_saas(asin_list)
    # Compare data quality
    quality_report = compare_data_quality(api_data, saas_data)
    return quality_report
```
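One possible shape for the comparison step is sketched below. It assumes both sources return a list of dicts with a hypothetical `asin` key, and the field names in `FIELDS` are illustrative; neither is taken from a real response schema.

```python
FIELDS = ("price", "rating", "review_count")  # illustrative field names


def compare_data_quality(api_data, saas_data, fields=FIELDS):
    """Compare two product lists and report coverage and field mismatches."""
    api_by_asin = {p["asin"]: p for p in api_data}
    saas_by_asin = {p["asin"]: p for p in saas_data}
    shared = sorted(api_by_asin.keys() & saas_by_asin.keys())

    # Count, per field, how many shared ASINs disagree between the sources.
    mismatches = {field: 0 for field in fields}
    for asin in shared:
        for field in fields:
            if api_by_asin[asin].get(field) != saas_by_asin[asin].get(field):
                mismatches[field] += 1

    return {
        "only_in_api": sorted(api_by_asin.keys() - saas_by_asin.keys()),
        "only_in_saas": sorted(saas_by_asin.keys() - api_by_asin.keys()),
        "compared": len(shared),
        "mismatches": mismatches,
    }
```

A report like this separates coverage gaps (ASINs only one source returned) from value disagreements, which usually call for different follow-up.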

Phase 2: Core Function Migration

After validating the Scrape API’s reliability, begin migrating the core functions that have the greatest impact on the business. It is usually recommended to start with the functions that have the largest data volume and the highest timeliness requirements.

Phase 3: Deep Optimization

Once the basic migration is complete, start leveraging the advantages of the Scrape API for deep optimization, including custom data processing logic and implementing features not supported by the SaaS tool.

Data Quality Assurance System

Using a Scrape API means you need to establish your own data quality assurance system:

- Data Integrity Monitoring: Set up monitoring mechanisms to ensure the integrity of key data fields.

- Anomalous Data Detection: Define rules to identify abnormal data, such as sudden price spikes or unusual review counts.

- Data Consistency Validation: For critical business data, use multiple methods for cross-validation.
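The first two checks can be expressed as small rule functions. The sketch below assumes each record is a dict with hypothetical `asin`, `price`, and `review_count` fields, and the 50% price-change threshold is an arbitrary illustration, not a recommended value.

```python
REQUIRED_FIELDS = ("asin", "price", "review_count")  # hypothetical schema


def missing_fields(record, required=REQUIRED_FIELDS):
    """Integrity check: list required fields that are absent or None."""
    return [f for f in required if record.get(f) is None]


def is_price_anomaly(previous_price, current_price, max_change=0.5):
    """Anomaly check: flag a relative price change larger than max_change."""
    if previous_price <= 0:
        return True  # A non-positive baseline is itself suspicious.
    change = abs(current_price - previous_price) / previous_price
    return change > max_change


def audit(records, last_prices):
    """Return {asin: [issue, ...]} for records that fail any rule."""
    issues = {}
    for record in records:
        problems = [f"missing:{f}" for f in missing_fields(record)]
        asin = record.get("asin")
        price = record.get("price")
        if price is not None and asin in last_prices:
            if is_price_anomaly(last_prices[asin], price):
                problems.append("price_jump")
        if problems:
            issues[asin] = problems
    return issues
```

Keeping each rule as its own function makes it straightforward to add further checks, such as unusual review counts, without changing `audit`’s callers.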

Cost Control and Performance Optimization

The flexibility of Scrape API usage also means you need more refined cost control:

- Intelligent Caching Strategy: Implement caching for data that changes infrequently to reduce unnecessary Scrape API calls.

- Request Consolidation Optimization: Reduce the number of Scrape API calls through batch requests.

- Off-peak Collection: Schedule data collection times based on business needs and Scrape API pricing strategies.
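A minimal sketch of the first two ideas follows: a time-to-live (TTL) cache in front of a batch fetch, so that only stale or unseen ASINs trigger an API call. `fetch_batch` is a hypothetical callable that takes a list of ASINs and returns a dict mapping each ASIN to its data, and the one-hour default TTL is only an illustration.

```python
import time


class TTLCache:
    """Cache product data per ASIN and refetch only entries older than ttl."""

    def __init__(self, fetch_batch, ttl_seconds=3600, clock=time.monotonic):
        self._fetch_batch = fetch_batch  # hypothetical: list[str] -> dict
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the cache can be tested
        self._store = {}  # asin -> (timestamp, data)

    def get_many(self, asins):
        now = self._clock()
        stale = [
            a for a in asins
            if a not in self._store or now - self._store[a][0] >= self._ttl
        ]
        if stale:
            # One consolidated request for everything that needs refreshing.
            for asin, data in self._fetch_batch(stale).items():
                self._store[asin] = (now, data)
        return {a: self._store[a][1] for a in asins if a in self._store}
```

Data that changes slowly, such as product titles, can use a long TTL, while prices would use a much shorter one.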

Pangolin Scrape API: A Professional-Grade E-commerce Data Collection Solution

When choosing an e-commerce data collection Scrape API, Pangolin offers a professional solution worth serious consideration. As a tech vendor specializing in e-commerce data collection, Pangolin designs its product around the practical needs of large-scale, high-quality data collection.

Technical Depth Advantage

Pangolin’s advantages in certain technical details directly address the pain points of traditional tools mentioned earlier:

- Precise Collection of Sponsored Ad Slots: A 98% collection rate means your PPC analysis and competitive intelligence gathering will be more accurate. In today’s increasingly fierce Amazon advertising competition, this precision can directly impact your ad spend ROI.

- Zip Code-Specific Collection Capability: Search results can vary by as much as 30% between different zip codes, especially in price-sensitive categories. This precise geolocation capability makes your market analysis more accurate.

- Complete Review Data Collection: After Amazon closed some of its review data interfaces, services that can completely collect “customer says” data have become scarce. This data is invaluable for product optimization and marketing strategy formulation.

Large-scale Processing Capability

For large e-commerce teams processing millions of data points daily, Pangolin’s processing capacity of tens of millions of pages per day leaves ample headroom, even during rapid business growth. Behind this scaling capability are:

- A distributed collection architecture that can automatically dispatch and load-balance.

- Intelligent anti-scraping strategies that ensure a high success rate for concurrent collection.

- Flexible data output formats, including raw HTML, Markdown, and structured JSON.

Cost-Effectiveness Advantage

Compared to building an in-house scraper team, the cost advantage of the Pangolin Scrape API is mainly reflected in:

- Lowered Technical Barrier: No need to invest heavily in R&D and maintenance for anti-scraping technology.

- Infrastructure Cost Savings: No need to invest in dedicated server clusters and network resources for collection.

- Time Cost Advantage: Can be launched quickly without the need for long-term technical accumulation.

Decision Framework: How to Make the Right Choice

Business Maturity Assessment

When choosing between a SaaS tool and a Scrape API, you first need to honestly assess your business maturity:

- Degree of Data-Drivenness: To what extent do your business decisions depend on data? If data is merely an aid to decision-making, a SaaS tool may suffice. But if data is a core competitive advantage, the flexibility of a Scrape API becomes crucial.

- Intensity of Customization Needs: How many of your data needs cannot be met by standardized products? If over 30% of your needs require customization, the ROI of a Scrape API solution will be high.

- Technical Team Capability: Does your team have the ability to integrate and maintain a Scrape API? This includes not only development capabilities but also operations and troubleshooting.

ROI Calculation Model

Establish a clear ROI calculation model to evaluate the two options:

- SaaS Tool Total Cost = Subscription Fees + Overage Charges + Opportunity Cost from Missing Features

- Scrape API Solution Total Cost = Scrape API Usage Fees + Development & Integration Costs + Maintenance Costs – Gains from Efficiency Improvements

Typically, once the business reaches a certain scale (e.g., processing over a million data points per month), the ROI of a Scrape API solution will significantly outperform that of a SaaS tool.
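The two formulas translate directly into code. The sketch below mirrors them term by term; every figure in the usage example is a made-up placeholder to show the mechanics, not real pricing from any vendor.

```python
def saas_total_cost(subscription, overage, opportunity_cost):
    """SaaS total cost = subscription + overage + opportunity cost."""
    return subscription + overage + opportunity_cost


def api_total_cost(usage_fees, development, maintenance, efficiency_gains):
    """Scrape API total cost = usage + development + maintenance - gains."""
    return usage_fees + development + maintenance - efficiency_gains


# Hypothetical annual figures in USD, for illustration only.
saas = saas_total_cost(subscription=60_000, overage=25_000,
                       opportunity_cost=40_000)
api = api_total_cost(usage_fees=50_000, development=30_000,
                     maintenance=20_000, efficiency_gains=15_000)
savings = saas - api  # Positive means the Scrape API option is cheaper.
```

With these placeholder inputs the SaaS option totals 125,000 and the Scrape API option 85,000, a difference of 40,000. The useful part is rerunning the model with your own numbers and seeing where the break-even point falls.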

Risk Assessment and Mitigation Strategy

Any technical decision involves risks; the key is how to assess and mitigate them:

- Technical Risk: A Scrape API solution requires more technical investment and may face issues like high integration complexity and increased maintenance costs. Mitigation strategies include choosing a Scrape API provider with comprehensive technical documentation and good technical support, as well as establishing thorough testing and monitoring systems.

- Vendor Risk: Over-reliance on a single Scrape API provider can pose business risks. Mitigation strategies include choosing a stable and reliable provider and maintaining a degree of technical flexibility to switch if necessary.

- Compliance Risk: Compliance issues related to data collection need to be handled with care. Ensure the chosen Scrape API provider has the necessary compliance capabilities.
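For the vendor risk noted above, one way to keep that switching flexibility is to have business code depend on a small interface of your own rather than on any vendor’s client directly. The sketch below uses `typing.Protocol` (Python 3.9+ for the built-in generic annotations); `ProductSource`, `InMemorySource`, and the `fetch_products` method are hypothetical names invented for illustration, not part of any provider’s SDK.

```python
from typing import Protocol


class ProductSource(Protocol):
    """Minimal interface that business code depends on."""

    def fetch_products(self, asins: list[str]) -> list[dict]:
        ...


class InMemorySource:
    """Stand-in provider; a real adapter would wrap a vendor's API here."""

    def __init__(self, catalog: dict[str, dict]):
        self._catalog = catalog

    def fetch_products(self, asins: list[str]) -> list[dict]:
        return [self._catalog[a] for a in asins if a in self._catalog]


def high_rated(source: ProductSource, asins: list[str],
               min_rating: float = 4.0) -> list[dict]:
    """Business logic written against the interface, not a vendor."""
    return [p for p in source.fetch_products(asins)
            if p["rating"] >= min_rating]
```

Switching providers then means writing one new adapter class with the same method, while functions like `high_rated` stay unchanged.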

Future Trends: The Technical Evolution of E-commerce Data Collection

AI-Driven Intelligent Collection

As AI technology develops, e-commerce data collection is becoming more intelligent. Future Scrape API services are likely to feature:

- Intelligent Data Recognition: Automatically identify and extract key information from pages without pre-set parsing rules.

- Dynamic Strategy Adjustment: Automatically adjust collection strategies based on changes to the target website.

- Semantic Understanding: Not only collect structured data but also understand the semantic information of text content.

Real-time Data Stream Processing

The traditional batch data collection model is shifting towards a real-time stream processing model:

- Streaming Scrape APIs: Provide a continuous data stream rather than one-off batches.

- Event-Driven Collection: Trigger data collection based on specific events (e.g., price changes, inventory updates).

- Edge Computing Integration: Perform initial data processing and analysis at the point of data collection.

Privacy Protection and Compliance

Increasingly stringent data privacy requirements will push Scrape API services to build out their compliance capabilities:

- Data Anonymization Technology: Automatically anonymize sensitive information during the collection process.

- Differential Privacy Protection: Protect individual privacy while ensuring data usability.

- Compliance Certifications: Scrape API providers will need to obtain relevant data processing compliance certifications.

Conclusion: Building a Future-Oriented Data Collection Architecture

Returning to the question at the beginning of the article: why are more and more tech teams choosing an e-commerce data collection Scrape API over SaaS tools?

The answer is not that a Scrape API is superior to SaaS tools in every aspect, but that once a business reaches a certain stage, the flexibility, control, and cost-effectiveness of a Scrape API begin to show clear advantages. This choice essentially reflects how a company understands and positions its data strategy.

By choosing a SaaS tool, you opt for convenience and a quick start, suitable for teams in the exploration phase or with relatively standard data needs. By choosing a Scrape API, you opt for architectural autonomy and limitless expansion possibilities, suitable for mature teams that view data as a core competitive advantage.

For most e-commerce companies of a certain scale, the migration from a SaaS tool to a Scrape API solution is an almost inevitable trend. The key is not if you should migrate, but when and how.

In this process, choosing the right Scrape API provider is crucial. A professional vendor like Pangolin, which specializes in e-commerce data collection and has deep technical expertise, can allow you to enjoy the flexibility of a Scrape API while avoiding the high investment and long timelines of building an in-house team.

Ultimately, regardless of the solution you choose, remember this: technology serves the business, and data drives decisions. The best architecture is not the most advanced one, but the one that best suits your current business needs and team capabilities. In this data-driven e-commerce era, making the right technical choice may be the key factor that sets you apart from the competition.

When your team is ready to take on bigger challenges, when your business needs more granular data support, and when you want to build a truly data-driven decision-making system—perhaps it’s time to consider migrating from a SaaS tool to a professional e-commerce data collection Scrape API. This is not just a technical upgrade; it’s a strategic transformation.