Deep Dive into SP Ads Collection Technology (2025-11-12)

Author / Brand: Pangolin | Topic: Amazon Sponsored Products Ads Data Collection

1. Problem Background: Why is Amazon SP Ads Collection so Hard?

Within Amazon’s ad ecosystem, Sponsored Products (SP ads) carry significant business value. They align directly with search intent, influence exposure and conversion, and serve as core data for bidding strategy and competitive intelligence. However, collecting SP ads is not just “grabbing a page’s HTML.” Challenges include:

- Extreme dynamism: The same keyword can show different ad slots depending on time window, geo, user persona, device, and viewport.

- Async loading and delayed rendering: Ad modules are often injected after the main content with jittery timing, so checking “too early” misses them and waiting “too long” causes timeouts.

- Cross-language and cross-site variance: Labels, DOM structures, and ARIA attributes differ across locales (.com/.co.uk/.de) and languages.

- Anti-bot and risk control: Frequency throttling, IP reputation, fingerprinting, bot detection, CAPTCHAs, and behavioral anomaly blocking make large-scale collection hard to keep stable.

In essence, SP ads collection is an engineering problem of operating against a highly dynamic system: controlling environment variables (geo, time, persona), adapting to rendering timing (wait strategies), and ensuring requests “survive” risk control long-term.

2. Technical Challenges: Amazon’s “Black-Box” Algorithms

SP ad display logic is driven by undisclosed bidding and ranking algorithms, effectively a black box:

- Bidding and delivery decisions: Whether an ad shows, to whom, and at which position depends on real-time bidding, relevance scoring, budget status, and frequency controls.

- Personalization and context: Browsing history, recent activity, and shopping preferences may affect which sponsored results are injected and how they rank.

- Small content and layout changes: Templates, DOM identifiers, ARIA attributes, and label wording change frequently, breaking parsers.

- Risk control and adversarial dynamics: The same opaque system also sets risk thresholds and blocking strategies, which shape collection windows and retry logic.

Therefore, collection isn’t a one-off task; it’s a long-term engineering process around a shifting black box. Only a feedback loop (collect → validate → fix → re-verify) sustains high-quality outputs amid change.

3. Solution: Our Technical Path (Partially Disclosed)

Key engineering ideas Pangolin uses for SP ads collection (partially disclosed):

3.1 Multi-layer Anti-detection and Real Persona Simulation

- Fingerprint/persona strategy: Dynamic user agents, locales, time zones, window sizes, plugin footprints, and input traces to simulate real users.

- Proxy orchestration: High-quality IP pools, circuit breakers, rate control, and partition isolation to reduce risk triggers.

- Interaction/wait strategy: Readiness checks based on page events and metrics rather than fixed delays, with explicit “readiness signals” for ad modules.
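A readiness check of this kind can be sketched minimally as follows, assuming a hypothetical `probe` callable that returns the current number of sponsored-slot nodes (for example, a selector count taken through whatever browser driver you use). Instead of sleeping for a fixed time, it polls until the count is non-zero and unchanged for several consecutive readings, or gives up at a timeout. The function name, parameters, and thresholds are illustrative assumptions, not Pangolin's implementation.

```python
import time
from typing import Callable


def wait_until_stable(
    probe: Callable[[], int],
    *,
    timeout_s: float = 10.0,
    interval_s: float = 0.25,
    stable_polls: int = 3,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[bool, int]:
    """Poll `probe` until it returns the same non-zero value `stable_polls`
    times in a row, or until `timeout_s` elapses.

    Returns (ready, last_value). `probe` is a hypothetical hook, e.g. a
    function that counts sponsored-slot nodes currently in the DOM.
    """
    deadline = clock() + timeout_s
    last = -1
    streak = 0  # consecutive identical non-zero readings, including the current one
    while True:
        value = probe()
        if value > 0 and value == last:
            streak += 1
        else:
            streak = 1 if value > 0 else 0
        last = value
        if streak >= stable_polls:
            return True, value
        if clock() >= deadline:
            return False, value
        sleep(interval_s)
```

A `False` result is a natural trigger for resampling the page later rather than parsing a half-rendered one; `clock` and `sleep` are injectable so the logic can be tested without real waiting.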

3.2 Robust Sponsored Slot Detection

Detecting sponsored slots reliably across languages and templates requires fusing multiple features:

- CSS/DOM component type: [data-component-type="sp-sponsored-result"]

- Label text: .s-sponsored-label-text, [aria-label*="Sponsored"]

- Container/context features: local, context-based label decisions to avoid single-point misclassification.
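To illustrate multi-feature fusion, here is a minimal scoring sketch. It assumes the feature families above have already been read from one result container into a hypothetical `SlotFeatures` record. The integer weights, the threshold, and the localized label words are placeholders to be calibrated per marketplace, not values from the source. With these weights, any two agreeing signals pass and no single signal does, and label evidence only counts when the label sits inside the slot's own container, which is one way to encode the local-context rule.

```python
from dataclasses import dataclass

# Hypothetical localized label words; verify each marketplace's actual wording.
SPONSORED_WORDS = {
    "en": ("sponsored",),
    "de": ("gesponsert",),
    "fr": ("sponsorisé",),
}

# Illustrative integer weights (integers avoid float rounding); tune on labeled samples.
W_COMPONENT, W_LABEL_CLASS, W_LABEL_TEXT = 4, 3, 2
THRESHOLD = 5  # every pair of signals reaches 5; no single signal does


@dataclass
class SlotFeatures:
    component_type: str       # data-component-type of the result container
    has_label_class: bool     # container holds an element with .s-sponsored-label-text
    label_text: str           # visible label text or aria-label, "" if none
    label_in_container: bool  # label belongs to this container, not a neighbor


def sponsored_score(f: SlotFeatures, lang: str = "en") -> int:
    score = 0
    if f.component_type == "sp-sponsored-result":
        score += W_COMPONENT
    # Local-context rule: ignore label evidence that does not belong to this slot.
    if f.label_in_container:
        if f.has_label_class:
            score += W_LABEL_CLASS
        text = f.label_text.casefold()
        if any(word in text for word in SPONSORED_WORDS.get(lang, ("sponsored",))):
            score += W_LABEL_TEXT
    return score


def is_sponsored(f: SlotFeatures, lang: str = "en") -> bool:
    return sponsored_score(f, lang) >= THRESHOLD
```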

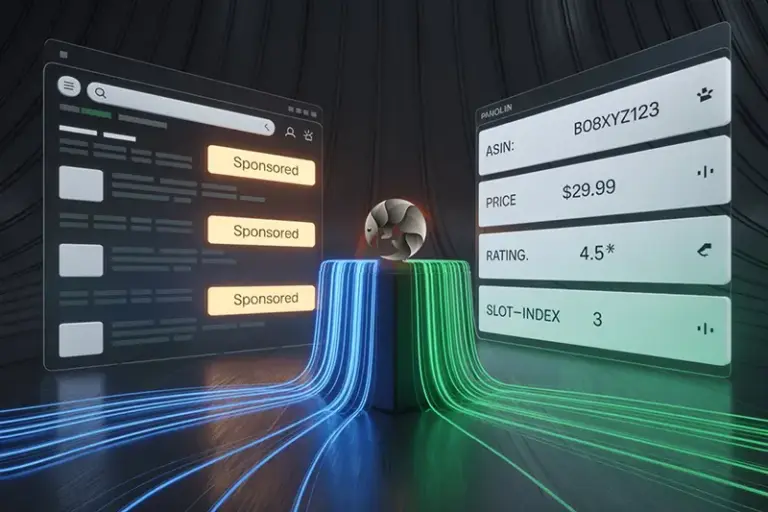

Once a slot is detected, extract structured fields: ASIN, title, price, rating, review count, seller, slot index, exposure region, and so on.
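Structured extraction can be sketched minimally with only Python's standard `html.parser`. It assumes well-formed markup in which each sponsored result is a container carrying `data-component-type="sp-sponsored-result"` plus a `data-asin` attribute, with the title inside an `<h2>`; the `data-asin` attribute, the `<h2>` title location, and treating `slot_index` as 1-based order among sponsored containers are all assumptions. Only ASIN, title, and slot index are extracted here; price, rating, and the other fields would follow the same pattern. Real pages are rarely this tidy, so a tolerant parser such as lxml or BeautifulSoup is the usual choice in practice.

```python
from html.parser import HTMLParser

# Void elements never get an end tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class SponsoredExtractor(HTMLParser):
    """Collects ASIN, <h2> title text, and slot order from sponsored containers."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._depth = 0         # open elements inside the current sponsored container; 0 = outside
        self._in_title = False
        self._current = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if self._depth == 0:
            # Outside any sponsored container: only look for the next one.
            if attrs.get("data-component-type") == "sp-sponsored-result" and attrs.get("data-asin"):
                self._current = {"asin": attrs["data-asin"], "title": "",
                                 "slot_index": len(self.results) + 1}
                self._depth = 1
            return
        if tag in VOID_TAGS:
            return
        self._depth += 1
        if tag == "h2":
            self._in_title = True

    def handle_endtag(self, tag):
        if self._depth == 0 or tag in VOID_TAGS:
            return
        if tag == "h2":
            self._in_title = False
        self._depth -= 1
        if self._depth == 0:
            # The container itself just closed: normalize whitespace and finalize the slot.
            self._current["title"] = " ".join(self._current["title"].split())
            self.results.append(self._current)
            self._current = None

    def handle_data(self, data):
        if self._in_title:
            self._current["title"] += data


def extract_sponsored(html: str) -> list:
    parser = SponsoredExtractor()
    parser.feed(html)
    parser.close()
    return parser.results
```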

3.3 Collection Loop and Quality Monitoring

- Multi-view resampling: Sample across time, geo, and viewport to raise coverage.

- Dedup/versioning: Deduplicate by ASIN and position; keep batch versions for backtracking.

- Automated regression: Validate after parser updates to prevent “fix-one-break-many.”

Reported quality metrics:

- SP ads coverage: ≈98% (composite sampling across multiple sites and languages)

- Misclassification rate: ≤2% (multi-feature fusion plus manual spot checks after sampling)

- Data freshness: minute-level
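The multi-view resampling and dedup/versioning steps listed above can be sketched as follows. This assumes records shaped like the API example in 3.4 (`asin`, `slot_index`), a hypothetical `SampleView` that pins every sampling parameter, and a short content hash used as the batch version id so that identical re-collections map to the same version. None of these names come from Pangolin's implementation.

```python
import hashlib
import itertools
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SampleView:
    """One fully parameterized view of a search page (hypothetical schema)."""
    keyword: str
    marketplace: str
    zipcode: str
    viewport: str
    time_window: str


def sampling_plan(keywords, geos, viewports, time_windows):
    """Cartesian product of sampling dimensions; geos are (marketplace, zipcode) pairs."""
    return [
        SampleView(kw, mkt, zipcode, vp, tw)
        for kw, (mkt, zipcode), vp, tw in itertools.product(keywords, geos, viewports, time_windows)
    ]


def dedup(records):
    """Keep the first record seen for each (asin, slot_index) pair, preserving order."""
    seen = set()
    unique = []
    for rec in records:
        key = (rec["asin"], rec["slot_index"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def batch_version(view, records):
    """Short content hash of a view plus its records, usable as a batch version id."""
    payload = json.dumps({"view": asdict(view), "records": records}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```

Storing each deduplicated batch under its version id, keyed by view, makes it possible to backtrack to exactly what a given view returned on a given run.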

3.4 API and Example (Refer to official docs)

In production, the API returns unified structured output and removes the overhead of maintaining in-house parsers. Example (endpoint and fields are subject to the official docs):

curl --request POST \
  --url https://scrapeapi.pangolinfo.com/api/v1/amazon/sponsored-ads/search \
  --header 'Authorization: Bearer YOUR_API_TOKEN' \
  --header 'Content-Type: application/json' \
  --data '{
    "keyword": "wireless earbuds",
    "marketplace": "US",
    "formats": ["json"],
    "bizContext": { "zipcode": "10041" },
    "options": { "includeOrganic": false, "viewport": "desktop" }
  }'

Response (excerpt):

{
  "sponsored": [
    {
      "asin": "B0XXXXXXX",
      "title": "Wireless Earbuds with Noise Cancellation",
      "price": 49.99,
      "rating": 4.5,
      "reviews": 10234,
      "seller": "BrandA",
      "slot_index": 1,
      "sponsored_label": true
    },
    { "asin": "B0YYYYYYY", "slot_index": 2, "sponsored_label": true }
  ],
  "meta": { "keyword": "wireless earbuds", "marketplace": "US", "geo": "10041" }
}

Note: The above is a demonstration format; actual fields and endpoints may change between versions. Please refer to the Pangolin docs.
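Consuming the demo response can be sketched minimally in Python using only the field names shown above. Because those fields may change by version, optional values default to `None`, unknown keys are ignored, and items are sorted by `slot_index`. `SponsoredItem` and `parse_sponsored` are hypothetical names, not part of any Pangolin SDK.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class SponsoredItem:
    asin: str
    slot_index: int
    sponsored_label: bool
    # Fields that may be absent in sparse items (like the second item in the excerpt).
    title: Optional[str] = None
    price: Optional[float] = None
    rating: Optional[float] = None
    reviews: Optional[int] = None
    seller: Optional[str] = None


def parse_sponsored(body: str) -> list[SponsoredItem]:
    """Parse the 'sponsored' array of a response body; unknown keys are ignored."""
    data = json.loads(body)
    items = [
        SponsoredItem(
            asin=raw["asin"],
            slot_index=int(raw["slot_index"]),
            sponsored_label=bool(raw.get("sponsored_label", False)),
            title=raw.get("title"),
            price=raw.get("price"),
            rating=raw.get("rating"),
            reviews=raw.get("reviews"),
            seller=raw.get("seller"),
        )
        for raw in data.get("sponsored", [])
    ]
    return sorted(items, key=lambda item: item.slot_index)
```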

4. Effect Verification: Real-world Comparison

We ran a two-week comparison on 200 high-traffic keywords across US/UK/DE, sampling different time windows and zip-code-level locations. Metrics (example):

- SP ads coverage: 98% (Pangolin) vs 65–75% (generic crawlers/non-vertical services)

- Misclassification rate: ≤2% (Pangolin) vs 5–12% (generic)

- Freshness: minute-level (Pangolin) vs 10–30 min (generic)

- Stability: sustained long-term collection without spikes in blocking (Pangolin)

We also evaluated two failure modes, “missing sponsored labels” and “delayed dynamic injection.” Multi-feature fusion reduces the former; readiness checks and resampling mitigate the latter, notably reducing timing-related misses.
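The source does not spell out how coverage and misclassification rate are computed. One common reading, sketched here as an assumption, compares detected sponsored slots against a manually labeled ground-truth sample: coverage is the share of true sponsored slots that were detected (recall), and misclassification rate is the share of detected slots that are not actually sponsored (one minus precision). Slots are identified by hypothetical `(marketplace, asin, slot_index)` tuples, and `evaluate_by` breaks results down per group such as marketplace.

```python
from collections import defaultdict


def evaluate(detected: set, truth: set) -> dict:
    """Coverage (recall) and misclassification rate (1 - precision) over slot keys."""
    hits = len(detected & truth)
    coverage = hits / len(truth) if truth else 1.0
    misclassification = len(detected - truth) / len(detected) if detected else 0.0
    return {"coverage": coverage, "misclassification": misclassification}


def evaluate_by(detected: set, truth: set, group) -> dict:
    """Run evaluate() separately for each group, e.g. group=lambda key: key[0] for marketplace."""
    det, tru = defaultdict(set), defaultdict(set)
    for key in detected:
        det[group(key)].add(key)
    for key in truth:
        tru[group(key)].add(key)
    return {g: evaluate(det[g], tru[g]) for g in sorted(set(det) | set(tru))}
```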

5. Insights

- Treat collection as systems engineering: sampling design, quality loops, and resilience to change are essential in dynamic systems.

- ROI first: in e-commerce verticals, in-house maintenance and opportunity costs are high; specialized APIs (e.g., Pangolin Scrape API) offer better cost-effectiveness and SLAs.

- Parameterization and reproducibility: clear sampling parameters (time window, geo, viewport, persona) ensure reproducible and interpretable comparisons.

- Compliance and governance: rate/frequency controls, logging, and versioning ensure long-term stable delivery.
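Rate and frequency control (listed here under governance, and under proxy orchestration in 3.1) is commonly built from two standard pieces: a token bucket that caps the request rate while allowing bounded bursts, and a circuit breaker that stops sending traffic through an exit (for example, a proxy partition) after repeated failures and retries it after a cooldown. The sketch below is a generic version of these patterns with assumed parameters, not Pangolin's orchestration logic; clocks are injectable so behavior can be tested deterministically.

```python
import time
from typing import Callable, Optional


class TokenBucket:
    """Allows on average `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; allows trials after `cooldown_s`."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        # Half-open: once the cooldown has elapsed, calls are let through as trials;
        # the next failure re-opens the breaker and a success closes it.
        return self.clock() - self.opened_at >= self.cooldown_s

    def record(self, ok: bool) -> None:
        if ok:
            self.failures = 0
            self.opened_at = None
        else:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
```

A scheduler would typically keep one breaker per proxy partition, send a request only when `breaker.allow()` and then `bucket.try_acquire()` both return `True`, and report the outcome with `breaker.record(ok)`.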

If your core goal is sponsored slot monitoring and competitive intelligence, start with a specialized e-commerce API such as Pangolin Scrape API to guarantee quality and freshness while reducing maintenance complexity, freeing resources for higher-value analysis and applications.