1. How Cross-Border E-commerce Relies on Data for Product Selection: First, Ask Yourself a Question

1.1 Why Has Product Selection Become a Hyper-Competitive Race to the Bottom?

In the past, product selection seemed like an arcane art. Most new sellers relied on various “magic” selection tools, best-seller lists, and crash courses to get products listed quickly. By 2025, however, this approach no longer works, for a simple reason: everyone is using the same tools.

When hundreds or thousands of sellers analyze data on the same SaaS platform, use the same keyword tools, and copy the same listing strategies, your “exclusive hot product” has already become an oversaturated commodity.

1.2 Data is the Underlying Logic Behind Decisions

Top-tier sellers no longer rely on generic product selection tools. They understand that product selection is, at its core, a data-driven decision-making system rather than blind trend-chasing.

These teams build product selection models tailored to their own operations by combining an Amazon product selection data scraping API with internal algorithms and proprietary selection logic. That combination is what gives them real competitive differentiation.

2. What Key Data is Needed for Amazon Operational Decisions?

Before building your product selection logic and operational strategy, you first need to answer one question: what data do you actually need to scrape?

2.1 Product Detail Page Data (including reviews, “Customer Says”)

- Title, subtitle, brand

- Description, variants, bullet points, A+ Content

- User rating trends, number of reviews

- “Customer Says” word frequency and sentiment analysis (positive/negative)

- Changes in recently added and removed reviews

This data reveals how a product actually performs and what users really think of it.
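To make the word-frequency and sentiment bullet concrete, here is a minimal Python sketch that counts terms across already-scraped review text and tallies them against tiny positive and negative word lists. The lexicons, stopword list, function name, and sample reviews are hypothetical placeholders, and a lexicon lookup is a deliberately crude stand-in for a real sentiment model.

```python
import re
from collections import Counter

# Hypothetical mini-lexicons for illustration only; a real pipeline would use
# a trained sentiment model or a much larger curated word list.
POSITIVE = {"great", "comfortable", "clear", "love", "excellent"}
NEGATIVE = {"broke", "poor", "disconnects", "uncomfortable", "refund"}
STOPWORDS = {"the", "a", "and", "is", "it", "to", "after", "i", "but",
             "very", "this", "of", "in", "my"}


def review_word_stats(reviews, top_n=5):
    """Return the top_n non-stopword terms and a positive/negative word tally."""
    counts = Counter()
    sentiment = Counter()
    for text in reviews:
        # Lowercase and split on anything that is not a letter or apostrophe.
        for word in re.findall(r"[a-z']+", text.lower()):
            if word in STOPWORDS:
                continue
            counts[word] += 1
            if word in POSITIVE:
                sentiment["positive"] += 1
            elif word in NEGATIVE:
                sentiment["negative"] += 1
    return counts.most_common(top_n), dict(sentiment)


# Made-up sample reviews for illustration.
reviews = [
    "Great sound and very comfortable",
    "Sound is great but the case broke after a week",
    "Poor battery, it disconnects often",
]
top_terms, tally = review_word_stats(reviews, top_n=2)
print(top_terms, tally)
```

Tracking the same tally week by week is what turns a one-off snapshot into the rating and sentiment trends listed above.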

2.2 Keyword Rankings and Sponsored Ad Distribution

- Keyword rankings (organic + sponsored)

- How often Sponsored Ads appear for a keyword

- Hour-by-hour changes in ad placements

- Ratio of ads to organic results in search results

Tracking Sponsored Products (SP) ad placements shows how intense the competition for a keyword is and how valuable its traffic is.
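Once a search results page has been parsed into records, the ad-to-organic ratio and a product's organic rank reduce to simple counting. The sketch below assumes a hypothetical record shape (`asin`, `position`, `sponsored`); the real field names depend on how your scraper or API structures its output, and the ASINs are placeholders.

```python
from dataclasses import dataclass


@dataclass
class SearchResult:
    asin: str        # product identifier
    position: int    # 1-based slot on the results page
    sponsored: bool  # True for ad placements


def ad_share(results, top_k=None):
    """Fraction of results that are sponsored, optionally only within the first top_k slots."""
    ranked = sorted(results, key=lambda r: r.position)
    if top_k is not None:
        ranked = ranked[:top_k]
    if not ranked:
        return 0.0
    return sum(r.sponsored for r in ranked) / len(ranked)


def organic_rank(results, asin):
    """1-based rank of an ASIN among organic (non-sponsored) results, or None if absent."""
    organic = [r for r in sorted(results, key=lambda r: r.position) if not r.sponsored]
    for rank, r in enumerate(organic, start=1):
        if r.asin == asin:
            return rank
    return None


# Placeholder ASINs for a four-slot results page.
page = [
    SearchResult("ASIN_A", 1, True),
    SearchResult("ASIN_B", 2, False),
    SearchResult("ASIN_C", 3, True),
    SearchResult("ASIN_D", 4, False),
]
print(ad_share(page), ad_share(page, top_k=1), organic_rank(page, "ASIN_D"))
```

Computing `ad_share` separately for the first few slots matters because ads concentrated at the top of the page say more about competitive pressure than the page-wide average.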

2.3 Category Best-Seller and New Release Lists

- Trends of products on the Top 100 list

- Frequency of new product launches

- Price and sales trends

- Changes in “Movers & Shakers” lists

This helps identify trending categories and how quickly each category’s rankings turn over.
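Category turnover can be measured by diffing consecutive snapshots of a ranked list. This sketch assumes each snapshot is simply a list of ASINs ordered by rank; the function names are illustrative, and the short lists in the usage stand in for full Top 100 snapshots.

```python
def compare_rankings(yesterday, today):
    """
    Compare two ranked snapshots, each a list of ASINs ordered by rank (index 0 is #1).
    Returns (new_entrants, dropouts, moves), where moves maps ASIN -> places gained
    (positive means the ASIN moved up).
    """
    prev = {asin: i + 1 for i, asin in enumerate(yesterday)}
    curr = {asin: i + 1 for i, asin in enumerate(today)}
    new_entrants = [a for a in today if a not in prev]
    dropouts = [a for a in yesterday if a not in curr]
    moves = {a: prev[a] - curr[a] for a in today if a in prev}
    return new_entrants, dropouts, moves


def turnover_rate(yesterday, today):
    """Share of today's list that was not on yesterday's list."""
    if not today:
        return 0.0
    return len(set(today) - set(yesterday)) / len(today)


# Placeholder four-item lists standing in for two daily Top 100 snapshots.
day1 = ["A", "B", "C", "D"]
day2 = ["C", "A", "E", "B"]
entrants, dropped, moves = compare_rankings(day1, day2)
print(entrants, dropped, moves, turnover_rate(day1, day2))
```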

2.4 Store Monitoring and Price Trend Analysis

- Changes in a store’s listed products

- Price fluctuations and repricing cycles

- Whether the store runs SP ads or uses review manipulation tactics

This data supports competitor monitoring and modeling.
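Repricing cycles can be estimated from a scraped price history by finding the dates on which the price changed and taking the typical gap between changes. This sketch assumes periodic price observations stored as integer cents (which avoids floating-point comparison issues); the function names and sample history are hypothetical, and the median is one reasonable choice of "typical gap", not the only one.

```python
from datetime import date
from statistics import median


def repricing_events(history):
    """history: (date, price_cents) pairs sorted by date. Returns dates on which the price changed."""
    return [d for (_, prev_price), (d, price) in zip(history, history[1:])
            if price != prev_price]


def median_repricing_interval(history):
    """Median number of days between consecutive price changes, or None if there are fewer than two."""
    events = repricing_events(history)
    if len(events) < 2:
        return None
    gaps = [(later - earlier).days for earlier, later in zip(events, events[1:])]
    return median(gaps)


# Made-up price history for one ASIN, in cents.
history = [
    (date(2025, 1, 1), 1999),
    (date(2025, 1, 2), 1999),
    (date(2025, 1, 3), 1899),
    (date(2025, 1, 6), 1999),
    (date(2025, 1, 9), 1799),
]
print(repricing_events(history), median_repricing_interval(history))
```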

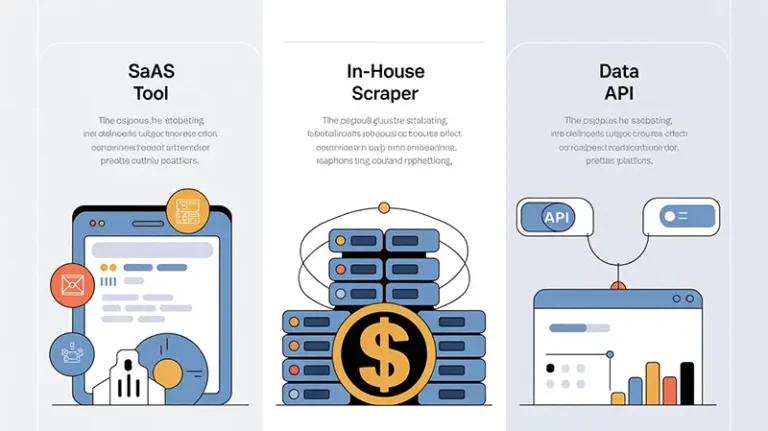

3. The Three Main Amazon Data Scraping Methods

3.1 SaaS Selection Tools: Standardized and Easy to Use, but Data is Limited

3.1.1 Advantages: Out-of-the-box, suitable for new sellers

The advantages of SaaS tools like Seller Spirit and Jungle Scout are:

- Quick to get started, clear interface

- Provide functions like keyword research, category lists, product analysis, and sales estimates

- Suitable for new sellers and light operations with monthly sales under $100,000

3.1.2 Disadvantages: Many limitations, data lag, and inflexible functionality

- Low update frequency; data is usually refreshed only once a day

- Cannot perform deep, batch scraping based on custom keywords or ASINs

- Advanced features often require extra payment

- Usage quotas and high monthly fees

3.2 In-house Scraper Teams: Highly Customized, High Maintenance Costs

3.2.1 Advantages: Strong control and customization capabilities

- Can design scraping workflows according to your own business processes

- More closely integrated with your own databases and selection logic

- Theoretically unlimited scalability

3.2.2 Disadvantages: High technical barrier, difficult to guarantee timeliness

- Extremely high costs for hiring scraper engineers, setting up proxy networks, and developing ways around anti-bot defenses

- Amazon’s anti-scraping strategies are frequently updated, so the success rate of scraping cannot be guaranteed

- Large-scale scraping (e.g., daily updates of all products in a top category) is almost impossible to sustain

- High ongoing maintenance costs, and data quickly becomes outdated

3.3 Using a Data Scraping API: The Dual Advantage of Flexibility and Scale

Take Pangolin as an example: its Scrape API and Data Pilot together form a complete scraping solution.

3.3.1 Scrape API: Get Structured Data and HTML

- Supports platforms such as Amazon, Walmart, Shopee, and eBay

- Returns real-time data as HTML, structured data, or Markdown

- Can scrape reviews, “Customer Says,” Sponsored Ad placements, and category Top lists

- Supports tens of millions of page scrapes per day

3.3.2 Data Pilot: No-code, Visual Scraping, and Customized Exports

- Quickly configure scraping tasks by keyword, ASIN, or category

- Supports scraping by postal code, at scheduled times, or hourly

- Automatically generates Excel-formatted data ready for operations

- No code required; as simple as configuring a form

4. Comparative Analysis: Which Method is Right for You?

| Comparison Dimension | SaaS Tools | In-house Scraper | Data Scraping API (e.g., Pangolin) |
| --- | --- | --- | --- |
| Data Breadth | Fixed fields and pages | Scalable, but requires development | Full platform support, various page types |
| Data Depth | Simplified fields | Customizable | Covers all fields including reviews, ads, word frequency |
| Real-time Capability | Daily updates | Uncertain | Minute-level updates |
| Cost | High monthly subscription | High initial investment | Low marginal cost, flexible billing |
| Technical Barrier | None | High | Low to medium (depending on integration) |
| Customization | Low | High | High (supports parameters & custom scenarios) |
| Suitable For | New sellers | Large, tech-savvy sellers | Mature sellers / Tool service providers |

5. Why Big Sellers No Longer Rely on SaaS Selection Tools

5.1 They Have In-house Selection Logic and Pursue Differentiated Competition

For big sellers with monthly sales in the hundreds of thousands of dollars, the standardized data provided by SaaS tools can no longer meet their operational needs. They place more value on:

- Data Verifiability and Uniqueness: Data that not everyone can easily obtain.

- Deep Integration with Their Own Systems: Creating a closed loop of Data → BI System → Operational Decision.

- More Refined Selection Ideas: Making decisions based on dimensions like keyword traffic source distribution and review sentiment trends.

5.2 Using APIs and External Data to Build a Private Database

Big sellers are usually equipped with data analysts and developers. They use data scraping APIs to combine Amazon’s public data with off-site signals (like Google search trends, social media trends) to build their own data systems. This approach brings the following benefits:

- Creating exclusive product selection models

- Building private tag libraries and hot-word systems

- Achieving cross-platform product selection synergy (Amazon + Shopify + TikTok)
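A private data system often starts as a join on a shared key. The sketch below merges per-keyword Amazon metrics with an off-site interest series (for example, weekly values exported from Google Trends, which scales interest from 0 to 100) and flags keywords where outside interest is rising while ad pressure on Amazon stays low. The field names, the week-over-week signal, and the 0.3 ad-share cap are all hypothetical choices for illustration.

```python
def merge_signals(amazon, trends):
    """
    amazon: keyword -> dict of Amazon-side metrics (here, a hypothetical 'ad_share' in 0..1).
    trends: keyword -> list of weekly interest values, oldest first (e.g. a Google Trends export).
    Returns keyword -> merged metrics plus 'trend_change', the latest week-over-week change.
    Keywords missing from either source, or with fewer than two weeks of data, are skipped.
    """
    merged = {}
    for kw, metrics in amazon.items():
        series = trends.get(kw)
        if not series or len(series) < 2:
            continue
        merged[kw] = {**metrics, "trend_change": series[-1] - series[-2]}
    return merged


def opportunities(merged, max_ad_share=0.3):
    """Keywords with rising off-site interest and Amazon ad share at or below a hypothetical cap."""
    return sorted(kw for kw, m in merged.items()
                  if m["trend_change"] > 0 and m["ad_share"] <= max_ad_share)


# Made-up inputs for illustration.
amazon = {
    "magnetic phone mount": {"ad_share": 0.2},
    "wireless earbuds": {"ad_share": 0.6},
    "desk fan": {"ad_share": 0.1},
}
trends = {
    "magnetic phone mount": [40, 55],
    "wireless earbuds": [70, 80],
    "desk fan": [60, 50],
}
print(opportunities(merge_signals(amazon, trends)))
```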

5.3 With Data in Hand, Decisions are More Proactive

Using an API is not just about simple scraping; it’s about giving sellers proactive control over their product selection rhythm, promotion timing, and inventory strategy.

In practice, they can:

- Monitor sponsored placements for target keywords every hour, so unusual competitor ad activity is caught promptly.

- Regularly pull newly added ASINs in a category and analyze whether new players are entering.

- Track review frequency and sentiment changes to understand where a product is in its lifecycle.
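Catching competitor ad anomalies hourly comes down to comparing consecutive snapshots of who holds the sponsored slots for each keyword. In this sketch, each snapshot is assumed to be a mapping from keyword to the set of sponsored ASINs observed that hour; the ASINs are placeholders, and the churn threshold of 3 is an arbitrary value you would tune against your own data.

```python
def sponsored_changes(prev_hour, this_hour):
    """
    prev_hour, this_hour: keyword -> set of sponsored ASINs seen in that hourly snapshot.
    Returns keyword -> (ASINs that started advertising, ASINs that stopped), for changed keywords only.
    """
    changes = {}
    for kw in prev_hour.keys() | this_hour.keys():
        before = prev_hour.get(kw, set())
        after = this_hour.get(kw, set())
        started, stopped = after - before, before - after
        if started or stopped:
            changes[kw] = (started, stopped)
    return changes


def flag_anomalies(changes, threshold=3):
    """Keywords where the number of ASINs entering plus leaving sponsored slots reaches the threshold."""
    return sorted(kw for kw, (started, stopped) in changes.items()
                  if len(started) + len(stopped) >= threshold)


# Placeholder ASINs in two consecutive hourly snapshots.
hour_1 = {"wireless earbuds": {"A", "B", "C"}, "earbuds case": {"X"}}
hour_2 = {"wireless earbuds": {"A", "D", "E", "F"}, "earbuds case": {"X"}}
changes = sponsored_changes(hour_1, hour_2)
print(changes, flag_anomalies(changes))
```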

6. How Pangolin Helps You Gain an Edge in Fine-tuned Amazon Operations

6.1 The Scraping Power and Customization of Scrape API

Pangolin’s Scrape API offers minute-level real-time scraping capabilities that are rare in the industry, supporting the following functions:

- Scrape any category, keyword, or ASIN page

- Full-field parsing, including product description, bullet points, variant information, reviews, sponsored positions, etc.

- Supports three data formats: HTML, structured JSON, and Markdown

- Allows setting parameters like postal code, time zone, language, and page number

Special advantages include:

- Sponsored Ad scraping success rate of over 98%, a leader in the industry.

- Can scrape “Customer Says” sentiment labels together with the popular reviews behind them.

- Best-seller list scraping supports filtering by price range, so you can shortlist products first and then scrape their details.

6.2 Data Pilot: Scrape Data Like Configuring a Workflow

Data Pilot is geared towards operations personnel, allowing them to complete data scraping without knowing how to code:

- Visually configure tasks (by keyword, ASIN, best-seller list, etc.)

- Generate Excel reports in an operations-friendly format

- Supports scheduled task execution, suitable for daily or hourly monitoring

- Comprehensive scraping dimensions, including advanced options such as SP ads and postal code settings

- Suitable for collaborative use by operations, product selection, and content teams, greatly lowering the barrier to working with data.

6.3 A One-Stop Data Solution from Keywords to Categories

Pangolin provides a complete data chain for cross-border operators:

- Keyword Mining → Search Result Scraping → Ad Placement Monitoring

- Product Details → Review Semantic Analysis → Consumer Feedback Trends

- Store Tracking → Competitor New Launch/Repricing Strategy Identification

- Category Lists → Best-seller Trend Modeling → Popular Tag Identification

All of this can be achieved with just a few API calls or through Data Pilot’s configuration process.

7. Practical Application Case Studies: Driving Product Selection with Data

7.1 Analysis of SP Ad Distribution and Review Sentiment for Hot Keywords

A large seller uses the Pangolin API to scrape the search results page for the keyword “wireless earbuds” every hour and analyze:

- Which products repeatedly appear in the top 10 positions?

- Which of them are sponsored placements, and is the ad capture rate stable?

- What are the high-frequency words in the reviews for these products? Are they positive or negative?

From this, they judge whether the traffic for that keyword is genuine and within reach, and whether the niche is worth entering.
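The first two questions can be answered by counting how often each ASIN lands in the top results across the hourly snapshots, and how often it does so as an ad. This sketch assumes each snapshot is a list of `(asin, is_sponsored)` tuples in page order; the function names, the placeholder ASINs, and the 50% persistence cutoff are hypothetical.

```python
from collections import Counter


def topk_persistence(snapshots, k=10):
    """
    snapshots: hourly result lists, each a list of (asin, is_sponsored) tuples in page order.
    Returns two Counters: in how many snapshots each ASIN appeared within the top k,
    and in how many of those it held at least one sponsored slot.
    """
    appearances, sponsored = Counter(), Counter()
    for results in snapshots:
        seen, seen_ad = set(), set()
        for asin, is_ad in results[:k]:
            # Count each ASIN at most once per snapshot, even if it shows up
            # both as an ad and as an organic result.
            if asin not in seen:
                seen.add(asin)
                appearances[asin] += 1
            if is_ad and asin not in seen_ad:
                seen_ad.add(asin)
                sponsored[asin] += 1
    return appearances, sponsored


def persistent_asins(snapshots, min_share=0.5, k=10):
    """ASINs found in the top k in at least min_share of snapshots, most persistent first."""
    if not snapshots:
        return []
    appearances, _ = topk_persistence(snapshots, k)
    return [asin for asin, count in appearances.most_common()
            if count / len(snapshots) >= min_share]


# Three made-up hourly snapshots, trimmed to three slots each for brevity.
snapshots = [
    [("A", True), ("B", False), ("C", False)],
    [("A", False), ("C", True), ("D", False)],
    [("C", False), ("A", True), ("E", False)],
]
print(persistent_asins(snapshots, k=2), topk_persistence(snapshots, k=2)[1])
```

An ASIN that stays in the top slots mostly through sponsored placements suggests paid rather than organic strength, which feeds directly into the "genuine and within reach" judgment.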

7.2 Building an ASIN Database + Best-Seller Trend Monitoring

Another tool service provider built an ASIN data warehouse, using the Scrape API to scrape the following every day:

- Amazon’s Best Sellers lists

- The number of variants, price changes, and ranking trends for each ASIN

They then combined this with Google Trends data to evaluate cross-platform trends.

Ultimately, they developed an AI product selection algorithm that helps their clients get leads on potential new products every day.
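A warehouse like this can start as a single table keyed by date, ASIN, and category. The sketch below uses Python's built-in `sqlite3` module purely for illustration; the schema, column names, and the `climbers` query are assumptions, not a description of the provider's actual system.

```python
import sqlite3

# Hypothetical schema: one row per ASIN, per category, per snapshot date.
SCHEMA = """
CREATE TABLE IF NOT EXISTS bsr_snapshot (
    snap_date     TEXT    NOT NULL,  -- ISO date such as '2025-01-01'
    asin          TEXT    NOT NULL,
    category      TEXT    NOT NULL,
    bsr_rank      INTEGER NOT NULL,
    price_cents   INTEGER,
    variant_count INTEGER,
    PRIMARY KEY (snap_date, asin, category)
)
"""


def open_warehouse(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def load_snapshot(conn, snap_date, category, rows):
    """rows: iterable of (asin, bsr_rank, price_cents, variant_count) tuples."""
    conn.executemany(
        "INSERT OR REPLACE INTO bsr_snapshot VALUES (?, ?, ?, ?, ?, ?)",
        [(snap_date, asin, category, rank, price, variants)
         for asin, rank, price, variants in rows],
    )
    conn.commit()


def rank_trend(conn, asin, category):
    """[(snap_date, bsr_rank), ...] for one ASIN, oldest first."""
    return conn.execute(
        "SELECT snap_date, bsr_rank FROM bsr_snapshot"
        " WHERE asin = ? AND category = ? ORDER BY snap_date",
        (asin, category),
    ).fetchall()


def climbers(conn, category, start, end, min_gain=10):
    """ASINs whose rank improved by at least min_gain places between two snapshot dates."""
    return conn.execute(
        """
        SELECT s.asin, s.bsr_rank - e.bsr_rank AS gain
        FROM bsr_snapshot AS s
        JOIN bsr_snapshot AS e ON s.asin = e.asin AND s.category = e.category
        WHERE s.category = ? AND s.snap_date = ? AND e.snap_date = ?
          AND s.bsr_rank - e.bsr_rank >= ?
        ORDER BY gain DESC
        """,
        (category, start, end, min_gain),
    ).fetchall()


# Made-up data: two daily snapshots for a placeholder category.
conn = open_warehouse()
load_snapshot(conn, "2025-01-01", "Earbuds", [("A", 50, 2999, 3), ("B", 5, 1999, 1)])
load_snapshot(conn, "2025-01-02", "Earbuds", [("A", 30, 2799, 3), ("B", 6, 1999, 1)])
print(rank_trend(conn, "A", "Earbuds"), climbers(conn, "Earbuds", "2025-01-01", "2025-01-02"))
```

Queries like `climbers` are the kind of daily signal a selection algorithm could consume; how the provider's AI model actually scores candidates is not described in the source.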

8. Frequently Asked Questions (FAQ)

8.1 How many technical resources are needed to build an in-house scraper?

Typically, you need at least one scraping engineer and one data engineer to build a proxy pool, error-retry logic, and a data-cleaning system. The cost is high, and maintenance is difficult.
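Of the components mentioned, error-retry logic is the easiest to show in isolation. This is a generic sketch of retry with exponential backoff and full jitter, assuming `fetch` is any callable you supply that raises an `OSError` subclass (such as `ConnectionError` or `TimeoutError`) on transient failures; the function name and defaults are hypothetical, and it does not address proxies or anti-bot measures.

```python
import random
import time


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=1.0, max_delay=30.0,
                     retry_on=(OSError,), sleep=time.sleep):
    """
    Call fetch(url), retrying on the given exception types with exponential backoff
    and full jitter (a random wait between 0 and the current cap). Re-raises the last
    error once max_attempts is used up. `sleep` is injectable so the logic is testable.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except retry_on:
            if attempt == max_attempts:
                raise
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))


# A stand-in fetch function that fails twice with a transient error, then succeeds.
calls = {"n": 0}


def flaky_fetch(url):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network error")
    return "<html>ok</html>"


waits = []
print(fetch_with_retry(flaky_fetch, "https://example.com/page", sleep=waits.append), waits)
```

Randomizing the wait spreads retries out so that many workers failing at once do not all hit the target again at the same moment.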

8.2 Will the API be blocked by the platform? Is it stable?

The Pangolin Scrape API uses an anti-blocking architecture designed for various e-commerce platforms, built on distributed IPs and a multi-path strategy. It runs stably at tens of millions of requests per day, and its scraping success rate is industry-leading.

8.3 Which countries and postal codes does Pangolin support for scraping?

It supports major markets such as the US, Canada, the UK, and Japan. You can precisely control the scraping region via postal code to analyze regional differences and localized ads.

8.4 Can it scrape the word frequency of buyer reviews on Amazon?

It supports the automatic extraction of hot words from the “Customer Says” module, along with sentiment indicators (positive/negative). It can also locate the specific top-rated reviews corresponding to those hot words.

8.5 Does it support generating Excel sheets ready for operations?

Data Pilot can export custom-formatted Excel sheets with selectable fields and controllable headers, suitable for direct integration into daily/weekly operational reporting workflows.

8.6 How can I integrate API data into my BI system?

Pangolin provides a standardized JSON data structure and supports integration with mainstream BI tools like Tableau, Power BI, and Looker through Webhooks, scheduled pulls, and asynchronous pushes.
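Most BI tools ingest flat tables more easily than nested JSON, so a common first step is to flatten records before loading or exporting them. This sketch assumes hypothetical nested product records (the field names are placeholders, not Pangolin's actual schema); nested objects become dotted column names, and lists are kept as JSON strings so each record stays on one row.

```python
import csv
import io
import json


def flatten(record, parent_key="", sep="."):
    """Flatten nested dicts into dotted keys; lists are JSON-encoded so each record stays one row."""
    flat = {}
    for key, value in record.items():
        name = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        elif isinstance(value, list):
            flat[name] = json.dumps(value, ensure_ascii=False)
        else:
            flat[name] = value
    return flat


def records_to_csv(records):
    """Convert (possibly nested) JSON records to CSV text whose columns are the union of all keys."""
    rows = [flatten(r) for r in records]
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)  # missing keys are written as ""
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Made-up records with a hypothetical structure.
records = [
    {"asin": "ASIN_A", "price": {"amount": 29.99, "currency": "USD"}, "badges": ["Best Seller"]},
    {"asin": "ASIN_B", "price": {"amount": 19.5, "currency": "USD"}, "rating": 4.4},
]
print(records_to_csv(records))
```

Taking the union of keys keeps the header stable even when some records lack optional fields, which spares the BI side from schema errors on partial data.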

9. Conclusion: Using the Right Data Tool is More Important than Spending Money

In the new era of product selection and fine-tuned operations, your method of data acquisition determines the size of the world you can see.

- SaaS tools are suitable for sellers new to cross-border e-commerce. They are out-of-the-box but have a clear ceiling.

- In-house scrapers are suitable for companies with technical teams, but the costs are high and stability is poor.

- An Amazon product selection data scraping API like Pangolin’s finds the optimal balance between flexibility, timeliness, breadth, and cost-effectiveness.

It gives every operations team that understands the value of data the same competitive power as big sellers, allowing them to make faster, more accurate, and deeper decisions in every aspect of product selection, operations, and marketing.