The Ultimate Guide: How to Build a High-Availability Amazon Product Monitoring System from Scratch

For every seller, brand, and data analytics firm competing on Amazon, building a monitoring system is not just a technical exercise; it is a strategic necessity. Prices fluctuate within minutes, competitors sell out overnight, and Buy Box ownership changes like a revolving door. In such a dynamic environment, manually refreshing pages cannot keep up. Automated, programmatic monitoring is the only practical way to maintain a competitive edge.

This article is not a simple tool recommendation; it is a detailed technical blueprint. We will start with system architecture design, guiding you step-by-step through the process of building your own full-featured Amazon product monitoring system using a common tech stack (like Python, a database, and a task scheduler). We will also analyze why using a professional API for the most critical “data acquisition” stage is the smarter choice.

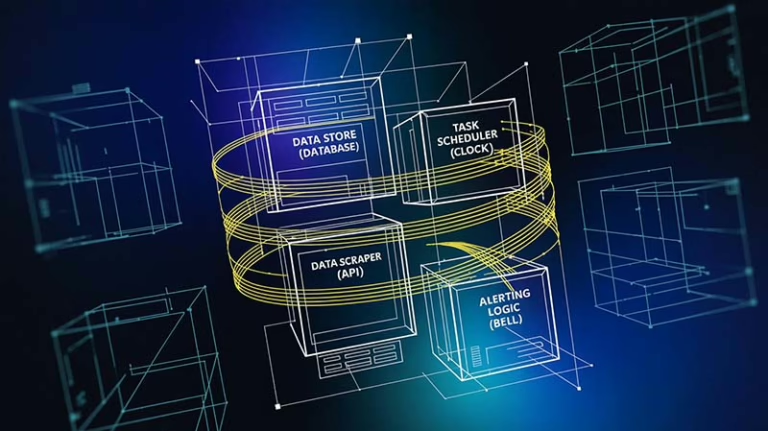

Chapter 1: Blueprint First: The Architecture of a Product Monitoring System

Before writing a single line of code, a clear architecture is the cornerstone of a successful project. A robust monitoring system can be broken down into four core components:

- Data Store: This is the memory of your system. We need a place to persist the list of products we care about and to log the historical data for every scrape. A relational database like PostgreSQL or MySQL is an excellent choice.

- Task Scheduler: The monitoring task needs to be executed automatically and periodically (e.g., once every hour). For simple scenarios, a Linux cron job can suffice. For more complex, high-availability systems, a professional scheduling framework like Celery Beat or APScheduler is more appropriate.

- Data Acquisition Module (Scraper): This is the most complex and fragile part of the entire system. Its sole responsibility is to reliably fetch the latest, accurate product data (price, stock, etc.) from Amazon for a given ASIN. There are two paths to implementing this module: building it entirely yourself or using a third-party data collection API.

- Change Detection & Alerting Logic: After new data is collected, this module is responsible for comparing it with the previous version stored in the database. If a key field (like price) has changed, it will trigger a notification mechanism (e.g., sending an email, a Slack message, or calling another API).
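The change-detection step in the last bullet can be sketched as a small pure function. This is only an illustration: the field names `price` and `in_stock` are assumptions, not keys guaranteed by any particular data source, and a real system would load the previous snapshot from the data store.

```python
from typing import Any


def detect_changes(
    previous: dict[str, Any],
    current: dict[str, Any],
    watched_fields: tuple[str, ...] = ("price", "in_stock"),  # hypothetical field names
) -> dict[str, tuple[Any, Any]]:
    """Return {field: (old, new)} for every watched field whose value differs."""
    changes = {}
    for field in watched_fields:
        old, new = previous.get(field), current.get(field)
        # A field missing from the new snapshot is treated as a failed
        # scrape, not as a change, so it never triggers an alert.
        if new is None:
            continue
        if old != new:
            changes[field] = (old, new)
    return changes


previous = {"price": 24.99, "in_stock": True}
current = {"price": 22.49, "in_stock": True}
print(detect_changes(previous, current))  # {'price': (24.99, 22.49)}
```

Keeping the comparison free of database and network calls makes it easy to unit-test, and the returned dictionary can be passed straight to whatever notification channel you choose.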

Chapter 2: Laying the Foundation: Database and Scheduler Implementation

Let’s turn the blueprint into reality with code.

1. Database Schema Design

We need at least two tables: one to store the products to be monitored, and another to log their price history.

Products Table (products_to_monitor)

SQL

CREATE TABLE products_to_monitor (
    id SERIAL PRIMARY KEY,
    asin VARCHAR(20) UNIQUE NOT NULL,
    url TEXT NOT NULL,
    last_known_price DECIMAL(10, 2),
    last_checked_at TIMESTAMP WITH TIME ZONE,
    is_active BOOLEAN DEFAULT TRUE,
    created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()
);

Price History Table (price_history)

SQL

CREATE TABLE price_history (
    id SERIAL PRIMARY KEY,
    product_id INTEGER REFERENCES products_to_monitor(id),
    price DECIMAL(10, 2) NOT NULL,
    scraped_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()
);
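To see how the two tables work together, here is a minimal sketch that joins them to summarize the price history of one ASIN. It uses Python's built-in `sqlite3` with an in-memory database purely as a stand-in for PostgreSQL, so the column types are simplified (`SERIAL` becomes `INTEGER PRIMARY KEY`), and the ASIN and prices are made-up sample data.

```python
import sqlite3

# In-memory SQLite database as a stand-in for PostgreSQL (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products_to_monitor (
        id INTEGER PRIMARY KEY,
        asin TEXT UNIQUE NOT NULL,
        url TEXT NOT NULL,
        last_known_price NUMERIC
    );
    CREATE TABLE price_history (
        id INTEGER PRIMARY KEY,
        product_id INTEGER REFERENCES products_to_monitor(id),
        price NUMERIC NOT NULL,
        scraped_at TEXT DEFAULT CURRENT_TIMESTAMP
    );
""")

# Made-up sample data: one product (id 1) and three price observations.
conn.execute(
    "INSERT INTO products_to_monitor (asin, url) VALUES (?, ?)",
    ("B000TEST01", "https://www.amazon.com/dp/B000TEST01"),
)
conn.executemany(
    "INSERT INTO price_history (product_id, price) VALUES (1, ?)",
    [(24.99,), (22.49,), (23.99,)],
)


def price_summary(conn, asin):
    """Return (observation_count, lowest, highest) for one ASIN."""
    return conn.execute(
        """
        SELECT COUNT(h.id), MIN(h.price), MAX(h.price)
        FROM products_to_monitor AS p
        JOIN price_history AS h ON h.product_id = p.id
        WHERE p.asin = ?
        """,
        (asin,),
    ).fetchone()


print(price_summary(conn, "B000TEST01"))  # (3, 22.49, 24.99)
```

The same query shape applies to PostgreSQL: `products_to_monitor` answers "what do we watch and what did we last see", while `price_history` answers "how has it moved over time".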

2. Task Scheduler Implementation (Example with Python APScheduler)

APScheduler is a lightweight yet powerful Python library for scheduling tasks, perfect for running periodic jobs within an application.

Python

# scheduler_setup.py
from apscheduler.schedulers.blocking import BlockingScheduler
import time


def my_monitoring_task():
    """
    This is a placeholder task representing the main monitoring logic
    we want to execute periodically.
    """
    print(f"Monitoring task is running... Time: {time.ctime()}")
    # Here, we will eventually call our data acquisition and change detection logic.
    # run_monitoring_logic()


# Create a scheduler instance
scheduler = BlockingScheduler()

# Add a job to run the my_monitoring_task function once every hour
scheduler.add_job(my_monitoring_task, 'interval', hours=1, id='amazon_monitor_job')

print("Scheduler has started. It will run the monitoring task every hour. Press Ctrl+C to exit.")

try:
    scheduler.start()
except (KeyboardInterrupt, SystemExit):
    pass

With this code, we’ve built the “skeleton” of our system—it knows what data to store and that it needs to “do something” every hour. Now, we need to add the most critical part: the data acquisition logic.

Chapter 3: Conquering the Hardest Part: Two Paths for the Data Acquisition Module

As mentioned, this is the most difficult section. Let’s explore two ways to implement it.

Path A: The Full DIY Path

Choosing this path means you will face all the challenges we’ve discussed in detail before:

- Anti-Scraping Warfare: You’ll need to procure and manage a massive residential proxy pool, integrate with CAPTCHA solving services, and meticulously mimic browser fingerprints to avoid being blocked.

- Parsing & Maintenance: You’ll have to write complex parsers for different page types on Amazon and be on constant alert, as Amazon can update its front-end code at any time, breaking your parsing rules and requiring emergency fixes.

- Handling JavaScript Rendering: For pages that heavily use JavaScript to load content dynamically, you must introduce headless browser technologies like Selenium or Playwright, which dramatically increases server resource consumption and system complexity.

Conclusion: Building everything yourself gives you full control, but it’s a bottomless pit of R&D investment that requires a dedicated team to maintain.

Path B: The Smart Shortcut with a Professional Data Collection API

The philosophy of this path is: Outsource the dirtiest, most tedious work (“data acquisition”) so you can focus on the most valuable work (“data application”).

Instead of building a massive scraper cluster yourself, you can call a professional Scrape API. It handles all the underlying challenges—proxies, CAPTCHAs, browser rendering, parsing, maintenance—in the cloud and provides you with stable, reliable, structured data through a simple API interface.

Here is an example of how to integrate the Pangolin Scrape API into our monitoring system to replace the DIY scraper:

Python

# data_acquirer.py
import os
from typing import Optional

import requests

# It's more secure to read the API key from environment variables or a config file
API_TOKEN = os.getenv("PANGOLIN_API_TOKEN")
API_BASE_URL = "http://scrapeapi.pangolinfo.com/api/v1"


def get_product_data_with_scrapeapi(asin: str, zipcode: str = "10041") -> Optional[dict]:
    """
    Fetches product data for a single ASIN using the Pangolin Scrape API.
    Returns the parsed product dict, or None if the request or parsing fails.
    """
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {API_TOKEN}"
    }
    payload = {
        "url": f"https://www.amazon.com/dp/{asin}",
        "formats": ["json"],
        "parserName": "amzProductDetail",  # Use the preset parser for Amazon product details
        "bizContext": {
            "zipcode": zipcode
        }
    }
    try:
        response = requests.post(API_BASE_URL, headers=headers, json=payload, timeout=60)
        response.raise_for_status()
        api_response = response.json()

        if api_response.get("code") == 0:
            # Assuming the parsed JSON data is in data['json'][0]
            # Note: This path might need adjustment based on the actual API response structure
            # "or" guards against a null "data" field and an empty "json" list
            json_items = (api_response.get("data") or {}).get("json") or [None]
            parsed_data = json_items[0]
            if parsed_data:
                return parsed_data
            else:
                print(f"API returned success but failed to parse JSON data for ASIN: {asin}")
                return None
        else:
            print(f"API call error for ASIN {asin}: {api_response.get('message')}")
            return None
    except (requests.exceptions.RequestException, ValueError) as e:
        # ValueError covers a response body that is not valid JSON
        print(f"Request failed for ASIN {asin}: {e}")
        return None

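With this get_product_data_with_scrapeapi function, our data acquisition module shrinks to a single HTTP call. Proxies, CAPTCHAs, rendering, and parsing are handled on the API side, and the caller receives either a parsed dictionary or None.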
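Because the function signals every failure by returning None, transient network problems can be smoothed over with a small retry wrapper. The sketch below is an assumption about how you might do this, not part of the Pangolin API: `fetch_with_retry` and its parameters are hypothetical names, and the demonstration uses a stand-in fetcher instead of a real API call.

```python
import random
import time
from typing import Callable, Optional


def fetch_with_retry(
    fetch: Callable[[str], Optional[dict]],
    asin: str,
    max_attempts: int = 3,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[dict]:
    """Call fetch(asin) up to max_attempts times with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        data = fetch(asin)
        if data is not None:
            return data
        if attempt < max_attempts:
            # Waits of roughly 2s, 4s, 8s, ... plus up to 1s of random
            # jitter so that many retrying workers do not fire in lockstep.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 1)
            sleep(delay)
    return None


# Demonstration with a stand-in fetcher that fails twice, then succeeds.
calls = []


def flaky_fetch(asin):
    calls.append(asin)
    return {"asin": asin, "price": "19.99"} if len(calls) == 3 else None


result = fetch_with_retry(flaky_fetch, "B000TEST01", sleep=lambda seconds: None)
print(result, len(calls))  # {'asin': 'B000TEST01', 'price': '19.99'} 3
```

In the monitoring system you would pass `get_product_data_with_scrapeapi` as `fetch`. Note that this wrapper retries on any None result, including permanent failures such as an invalid token, so keep `max_attempts` small.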

Chapter 4: Tying It All Together: The Complete Monitoring Logic

Now, let's integrate all the components to build the core logic of our monitoring system. We will modify the scheduler code from Chapter 2 to execute the real monitoring task. The result is the core of a Python Amazon monitoring script; the database_connector module it imports is one you need to supply for your own database.

Python

# main_monitor.py
from decimal import Decimal, InvalidOperation

from apscheduler.schedulers.blocking import BlockingScheduler

from data_acquirer import get_product_data_with_scrapeapi  # Import our data acquisition function
import database_connector  # Assume this is a module you've written to connect to your database


def to_decimal(value):
    """Normalize a price (e.g. 19.99 or "$1,299.00") to Decimal; return None if it can't be parsed."""
    try:
        return Decimal(str(value).replace("$", "").replace(",", "").strip())
    except InvalidOperation:
        return None


def main_monitoring_task():
    print("Starting main monitoring task...")
    # 1. Get all active products to monitor from the database
    products = database_connector.get_active_products()

    for product in products:
        asin = product['asin']
        last_price = to_decimal(product['last_known_price'])
        print(f"Checking ASIN: {asin}...")

        # 2. Get the latest product data using the Scrape API
        current_data = get_product_data_with_scrapeapi(asin)
        if not current_data:
            print(f"Could not retrieve data for ASIN: {asin}. Skipping this check.")
            continue

        # 3. Change Detection: Compare prices
        # Note: The field name 'price' must match the key in the JSON response from the API
        # Both sides are normalized to Decimal so that "19.99" and 19.99 compare as equal
        current_price = to_decimal(current_data.get('price'))

        if current_price is not None and current_price != last_price:
            print(f"!!! Price Change Alert for ASIN: {asin} !!!")
            print(f"Old Price: {last_price}, New Price: {current_price}")

            # 4. Trigger Notification (here we just print, in a real app you'd call an email/Slack API)
            # send_alert(f"ASIN {asin} price changed from {last_price} to {current_price}")

            # 5. Update the database
            database_connector.update_product_price(asin, current_price)
            database_connector.log_price_history(asin, current_price)
        else:
            print(f"ASIN: {asin} price has not changed.")

        # Update the last_checked_at timestamp even if there's no price change
        database_connector.update_last_checked_time(asin)

    print("Main monitoring task finished.")


# --- Scheduler Setup ---
scheduler = BlockingScheduler()
scheduler.add_job(main_monitoring_task, 'interval', hours=1, id='amazon_monitor_job')

print("Monitoring system has started and will run every hour.")

try:
    scheduler.start()
except (KeyboardInterrupt, SystemExit):
    pass

At this point, we have a functional prototype of a monitoring system. It can automatically and periodically check product prices and take action when a change is detected.

Conclusion: Focus on Value, Not on Reinventing the Wheel

As we’ve explored in this guide, the core challenge in building an Amazon Product Monitoring System lies in stable and reliable data acquisition. The path of building everything from scratch is feasible, but it demands that you invest immense resources into solving a series of technical problems that are unrelated to your core business.

A wiser engineering practice is to decouple complex systems and delegate specialized tasks to professional tools. In a monitoring system, outsourcing the most difficult and maintenance-heavy component—data acquisition—to a mature third-party service like the Pangolin Scrape API frees your team from the tedious work of “reinventing the wheel.” This allows you to focus 100% of your valuable R&D and operational resources on what truly matters: analyzing the data, understanding the market, and ultimately, driving business growth.

To learn more about the API’s capabilities, you can consult our detailed User Guide and start your journey toward efficient data monitoring.